Test-AutoBuild is the oldest open source project of mine that I still actually work on. The original code dates all the way back to approximately the year 2000, when Richard Jones and I were working at a now defunct dot-com called BiblioTech (random archive.org historical link). Rich wrote a script called “Rolling Build” which would continuously check out & build all our software from CVS, publishing the results as RPMs. Thankfully the company allowed the script to be open sourced under the GPLv2+ and I used it as the basis for creating a project called Test-AutoBuild in ~2004. We expanded the code to cover many different SCM tools, to maintain historical archives of builds so that modules are not rebuilt if no code has changed, and many other things besides. I did a couple of releases a year for a while, but it has been on the backburner for the last couple of years. With the increasing number of inter-related virtualization projects using libvirt & KVM I decided it was time to put Test-AutoBuild back into use as a build server. Yes, there are many automated build systems in existence these days that I could have chosen, but I was looking for an excuse to hack on mine again :-)

There was a quiet, mostly unannounced release 1.2.3 back at the start of August, primarily to fix the utterly broken GIT support I originally wrote. A couple of weeks ago, immediately before going on holiday, I uploaded release 1.2.4 to the CPAN distribution page. Aside from fixing a number of horrible bugs, the 1.2.4 release brought in the ability to rsync the build results pages to a remote server, so it is now possible to run the automated builds inside one (or more) private virtual machines and publish the results to a separate public webserver. The second major change was the incorporation of a new theme for the Test-AutoBuild project website and the build status pages. Previously we had used a pretty lame icon of a gear wheel as the logo and a fairly plain web site style. Looking around for some better ideas I happened to come across a proposal for a Fedora 10 Theme that was never taken up. Nicu Buculei and Máirín Duffy, who produced that artwork, were generous enough to grant me permission to use the graphics for Test-AutoBuild under the CC-BY-SA 3.0 and GPLv2+ licenses. Thus for the 1.2.4 release the status pages for the automated builds have been completely restyled and the project’s main website has been similarly updated.

With the 1.2.4 release out and updated RPMs pushed into Fedora, I’m now able to publish the results of the automated builds I run for nearly all the libvirt related virtualization projects. The builder currently runs in a Fedora 14 virtual machine. The plan is to install further virtual machines running other important target OSes, at the very least Debian and one of the BSDs, so we can be sure we aren’t causing regressions in our codebases. If I’m feeling adventurous I might even set up a QEMU PPC instance to run some builds on a non-x86 architecture, though that will probably be painfully slow :-)

I’ve written before about how virtualization causes pain wrt keyboard handling and about the huge number of scancode/keycode sets you have to worry about. Following on from that investigative work I completely rewrote GTK-VNC’s keycode handling, so it is able to correctly translate the keycodes it receives from GTK on Linux, Win32 and OS-X, even when running against a remote X11 server on a different platform. In doing so I made sure that the tables used for doing conversions between keycode sets were not just big arrays of magic numbers in the code, as is common practice across the kernel or QEMU codebase. Instead GTK-VNC now has a CSV file containing the unadulterated mapping data along with a simple script to split out mapping tables. This data file and script have already been reused to solve the same keycode mapping problem in SPICE-GTK.

Fast-forward a year and a libvirt developer from Fujitsu is working on a patch to wire up QEMU’s “sendkey” monitor command to a formal libvirt API. The first design question is how should the API accept the list of keys to be injected to the guest. The QEMU monitor command accepts a list of keycode names as strings, or as keycode values as hex-encoded strings. The QEMU keycode values come from what I term the “RFB” codeset, which is just the XT codeset with a slightly unusual encoding of extended keycodes. VirtualBox meanwhile has an API which wants integer keycode values, from the regular XT codeset.

One of the problems with the XT codeset is that no one can ever quite agree on what is the official way to encode extended keycodes, or whether it is even possible to encode certain types of key. There is also a usability problem with having the API require a low-level, hardware oriented keycode set as input, in that as an application developer you might know what Win32 virtual keycode you want to generate, but have no idea what the corresponding XT keycode is. It would be preferable if you could simply directly inject a Win32 keycode to a Windows guest, or directly inject a Linux keycode to a Linux guest, etc.

After a little bit of discussion we came to the conclusion that the libvirt API should accept an array of integer keycodes, along with an enum parameter specifying what keycode set they belong to. Internally libvirt would then translate from whatever keycode set the application used, to the keycode set required by the hypervisor’s own API. Thus we got an API that looks like:

    typedef enum {
        VIR_KEYCODE_SET_LINUX = 0,
        VIR_KEYCODE_SET_XT = 1,
        VIR_KEYCODE_SET_ATSET1 = 2,
        VIR_KEYCODE_SET_ATSET2 = 3,
        VIR_KEYCODE_SET_ATSET3 = 4,
        VIR_KEYCODE_SET_OSX = 5,
        VIR_KEYCODE_SET_XT_KBD = 6,
        VIR_KEYCODE_SET_USB = 7,
        VIR_KEYCODE_SET_WIN32 = 8,
        VIR_KEYCODE_SET_RFB = 9,

        VIR_KEYCODE_SET_LAST,
    } virKeycodeSet;

    int virDomainSendKey(virDomainPtr domain,
                         unsigned int codeset,
                         unsigned int holdtime,
                         unsigned int *keycodes,
                         int nkeycodes,
                         unsigned int flags);

As with all libvirt APIs, this is also exposed in the virsh command line tool, via a new “send-key” command. As you might expect, this accepts a list of integer keycodes as parameters, along with a keycode set name. If the keycode set is omitted, the Linux keycode set is assumed by default. To be slightly more user friendly though, for the Linux, Win32 & OS-X keycode sets, we also support symbolic keycode names as an alternative to the integer values. These names are simply the names of the #define constants from the corresponding header file.

Some examples of how to use the new virsh command are:

    # send three strokes 'k', 'e', 'y', using xt codeset
    virsh send-key dom --codeset xt 37 18 21

    # send one stroke 'right-ctrl+C'
    virsh send-key dom KEY_RIGHTCTRL KEY_C

    # send a tab, held for 1 second
    virsh send-key dom --holdtime 1000 0xf

So when interacting with virtual guests you now have a choice of how to send fake keycodes. If you have a VNC or SPICE connection directly to the guest in question, you can inject keycodes over that channel, while if you have a libvirt connection to the hypervisor you can inject keycodes over that channel.
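To make the translation step a little more concrete, here is a minimal Python sketch of table-driven keycode conversion. It is only an illustration, not libvirt’s or GTK-VNC’s implementation: the CSV column layout, the `build_table` and `translate` helpers and the five-row table are all assumptions made for this example, although the individual keycode values are the standard Linux, XT and Win32 ones.

```python
import csv
import io

# Hypothetical, heavily trimmed mapping data. The column layout is an
# assumption made for this sketch, not the format of any real data file.
KEYMAP_CSV = """\
name,linux,xt,win32
KEY_TAB,15,15,9
KEY_E,18,18,69
KEY_Y,21,21,89
KEY_K,37,37,75
KEY_C,46,46,67
"""


def build_table(src, dst):
    """Build a {src keycode: dst keycode} lookup from the CSV data."""
    table = {}
    for row in csv.DictReader(io.StringIO(KEYMAP_CSV)):
        table[int(row[src])] = int(row[dst])
    return table


def translate(keycodes, src, dst):
    """Translate a list of keycodes from one keycode set to another.

    Raises KeyError if a keycode has no entry in the table.
    """
    if src == dst:
        return list(keycodes)
    table = build_table(src, dst)
    return [table[code] for code in keycodes]
```

With this table, `translate([0x4B, 0x45, 0x59], "win32", "xt")` gives `[37, 18, 21]`, the same XT values used for 'k', 'e', 'y' in the first virsh example.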

A couple of years ago Dan Walsh introduced the SELinux sandbox which was a way to confine what resources an application can access using SELinux and the Linux filesystem namespace functionality. Meanwhile we developed sVirt in libvirt to confine QEMU virtual machines, and QEMU itself has gained support for passing host filesystems straight through to the guest operating system, using a VirtIO based transport for the 9p filesystem. This got me thinking about whether it was now practical to create a sandbox based on QEMU, or rather KVM, by booting a guest with a root filesystem pointing to the host’s root filesystem (readonly of course), combined with a couple of overlays for /tmp and /home, all protected by sVirt.

One prominent factor in the practicality is how much time the KVM and kernel startup sequences add to the overall execution time of the command being sandboxed. From Richard Jones’ work on libguestfs I know that it is possible to boot to a functioning application inside KVM in < 5 seconds. The approach I take with 9pfs has a slight advantage over libguestfs because it does not incur the initial (one-time only per kernel version) delay for building a virtual appliance based on the host filesystem, since we’re able to directly access the host filesystem from the guest. The fine details will have to wait for a future blog post, but suffice to say, a stock Fedora kernel can be made to boot to the point of exec()ing the ‘init’ binary in the ramdisk in ~0.6 seconds, and the custom ‘init’ binary I use for mounting the 9p filesystems takes another ~0.2 seconds, giving a total boot time of 0.8 seconds.

Boot up time, however, is only one side of the story. For some application sandboxing scenarios, the shutdown time might be just as important as startup time. I naively thought that the kernel shutdown time would be unmeasurably short. It turns out I was wrong, big time. Timestamps on the printk messages showed that the shutdown time was in fact longer than the bootup time! The telling messages were:

[ 1.486287] md: stopping all md devices.

[ 2.492737] ACPI: Preparing to enter system sleep state S5

[ 2.493129] Disabling non-boot CPUs ...

[ 2.493129] Power down.

[ 2.493129] acpi_power_off called

which point a finger towards the MD driver. I was sceptical that the MD driver could be to blame, since my virtual machine does not have any block devices at all, let alone MD devices. To be sure though, I took a look at the MD driver code to see just what it does during kernel shutdown. To my surprise the answer was blindingly obvious:

    static int md_notify_reboot(struct notifier_block *this, unsigned long code, void *x)
    {
        struct list_head *tmp;
        mddev_t *mddev;

        if ((code == SYS_DOWN) || (code == SYS_HALT) || (code == SYS_POWER_OFF)) {

            printk(KERN_INFO "md: stopping all md devices.\n");

            for_each_mddev(mddev, tmp)
                if (mddev_trylock(mddev)) {
                    /* Force a switch to readonly even array
                     * appears to still be in use. Hence
                     * the '100'.
                     */
                    md_set_readonly(mddev, 100);
                    mddev_unlock(mddev);
                }
            /*
             * certain more exotic SCSI devices are known to be
             * volatile wrt too early system reboots. While the
             * right place to handle this issue is the given
             * driver, we do want to have a safe RAID driver ...
             */
            mdelay(1000*1);
        }
        return NOTIFY_DONE;
    }

In other words, regardless of whether you actually have any MD devices, it’ll impose a fixed 1 second delay into your shutdown sequence :-(
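For anyone wanting to hunt for this kind of stall in their own logs, the gap between consecutive printk timestamps is easy to compute mechanically. The following is a rough Python sketch, not a tool I actually used; the `largest_gap` helper and the regular expression are made up for illustration and assume the default “[ seconds.micros] message” printk format shown above.

```python
import re

# The shutdown messages quoted above.
LOG = """\
[ 1.486287] md: stopping all md devices.
[ 2.492737] ACPI: Preparing to enter system sleep state S5
[ 2.493129] Disabling non-boot CPUs ...
[ 2.493129] Power down.
[ 2.493129] acpi_power_off called
"""

# Matches "[ 1.486287] message", capturing timestamp and message text.
STAMP = re.compile(r"^\[\s*(\d+\.\d+)\]\s*(.*)$")


def largest_gap(log):
    """Return (seconds, message) for the message followed by the longest pause."""
    entries = []
    for line in log.splitlines():
        match = STAMP.match(line)
        if match:
            entries.append((float(match.group(1)), match.group(2)))
    # Pair each entry with its successor and measure the time between them.
    gaps = [(after[0] - before[0], before[1])
            for before, after in zip(entries, entries[1:])]
    return max(gaps)


gap, message = largest_gap(LOG)
print("%.3fs after: %s" % (gap, message))
```

On the messages above this reports a pause of roughly 1.006 seconds following the “md: stopping all md devices.” line, which is the mdelay(1000*1) call plus a little overhead.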

With this kernel bug fixed, the total time my KVM sandbox spends running the kernel is reduced by more than 50%, from 1.9s to 0.9s. The biggest delay is now down to SeaBIOS & QEMU which together take 2s to get from the start of QEMU main(), to finally jumping into the kernel entry point.

Traditionally, if you have manually launched a QEMU process, either as root, or as your own user, it will not be visible to/from libvirt’s QEMU driver. This is an intentional design decision because given an arbitrary QEMU process, it is very hard to determine what its current or original configuration is/was. Without knowing QEMU’s configuration, it is hard to reliably perform further operations against the guest. In general, this limitation has not proved a serious burden to users of libvirt, since there are a variety of ways to launch new guests directly with libvirt, whether graphical (virt-manager) or command line driven (virt-install).

There are always exceptions to the rule, though, and one group of users who have found this a problem is the QEMU/KVM developer community itself. During the course of developing & testing QEMU code, they often need to quickly launch QEMU processes with a very specific set of command line arguments. This is hard to do with libvirt, since when there is a choice of which command line parameters to use for a feature, libvirt will pick one according to some predetermined rule. As an example, if you want to test something related to the old style ‘-drive’ parameters with QEMU, and libvirt is using the new style ‘-device + -drive’ parameters, you are out of luck & will not be able to force libvirt to use the old syntax. There are other libvirt-based tools that QEMU developers may well still want to take advantage of though, like virt-top or virt-viewer. Thus it is desirable to have a way to launch QEMU with arbitrary command line arguments, but still use libvirt.

A little while ago we did introduce support for adding extra QEMU specific command line arguments in the guest XML configuration, using a separate namespace. This is not entirely sufficient, or satisfactory, for the particular scenario outlined above. For this reason, we’ve now introduced a new QEMU-specific API into libvirt that allows the QEMU driver to attach to an externally launched QEMU process. This API is not in the main library, but rather in the separate libvirt-qemu.so library. Use of this library by applications is strongly discouraged and many distros will not support its use in production deployments. It is intended primarily for developer / troubleshooting scenarios. This QEMU specific API is also exposed in virsh, via a QEMU specific command ‘qemu-attach’. So now it is possible for the QEMU developers to launch a QEMU process and connect it to libvirt:

    $ qemu-kvm \
        -cdrom ~/demo.iso \
        -monitor unix:/tmp/myexternalguest,server,nowait \
        -name myexternalguest \
        -uuid cece4f9f-dff0-575d-0e8e-01fe380f12ea \
        -vnc 127.0.0.1:1 &
    $ QEMUPID=$!
    $ virsh qemu-attach $QEMUPID
    Domain myexternalguest attached to pid 14725

Once attached, most of the normal libvirt commands and tools will be able to at least see the guest. For example, query its status

$ virsh list

Id Name State

----------------------------------

1 myexternalguest running

$ virsh dominfo myexternalguest

Id: 1

Name: myexternalguest

UUID: cece4f9f-dff0-575d-0e8e-01fe380f12ea

OS Type: hvm

State: running

CPU(s): 1

CPU time: 15.1s

Max memory: 65536 kB

Used memory: 65536 kB

Persistent: no

Autostart: disable

Security model: selinux

Security DOI: 0

Security label: unconfined_u:unconfined_r:unconfined_qemu_t:s0-s0:c0.c1023 (permissive)

libvirt reverse engineers an XML configuration for the guest based on the command line arguments it finds for the process in /proc/$PID/cmdline and /proc/$PID/environ. This uses the same code that is available via the virsh domxml-from-native command. The important caveat is that the QEMU process being attached to must not have had its configuration modified via the monitor. If that has been done, then the /proc command line will no longer match the current QEMU process state.

$ virsh dumpxml myexternalguest

<domain type='kvm' id='1'>

<name>myexternalguest</name>

<uuid>cece4f9f-dff0-575d-0e8e-01fe380f12ea</uuid>

<memory>65536</memory>

<currentMemory>65536</currentMemory>

<vcpu>1</vcpu>

<os>

<type arch='i686' machine='pc-0.14'>hvm</type>

</os>

<features>

<acpi/>

<pae/>

</features>

<clock offset='utc'/>

<on_poweroff>destroy</on_poweroff>

<on_reboot>restart</on_reboot>

<on_crash>destroy</on_crash>

<devices>

<emulator>/usr/bin/qemu-kvm</emulator>

<disk type='file' device='cdrom'>

<source file='/home/berrange/demo.iso'/>

<target dev='hdc' bus='ide'/>

<readonly/>

<address type='drive' controller='0' bus='1' unit='0'/>

</disk>

<controller type='ide' index='0'>

<address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>

</controller>

<input type='mouse' bus='ps2'/>

<graphics type='vnc' port='5901' autoport='no' listen='127.0.0.1'/>

<video>

<model type='cirrus' vram='9216' heads='1'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>

</video>

<memballoon model='virtio'>

<address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>

</memballoon>

</devices>

<seclabel type='static' model='selinux' relabel='yes'>

<label>unconfined_u:unconfined_r:unconfined_t:s0-s0:c0.c1023</label>

</seclabel>

</domain>

Tools like virt-viewer will be able to attach to the guest just fine

$ ./virt-viewer --verbose myexternalguest

Opening connection to libvirt with URI

Guest myexternalguest is running, determining display

Guest myexternalguest has a vnc display

Opening direct TCP connection to display at 127.0.0.1:5901

Finally, you can of course kill the attached process

$ virsh destroy myexternalguest

Domain myexternalguest destroyed

The important caveats when using this feature are

- The guest config must not be modified using monitor commands between the time the QEMU process is started and when it is attached to the libvirt driver

- There must be a monitor socket for the guest using the ‘unix’ protocol as shown in the example above. The socket location does not matter, but it must be a server socket

- It is strongly recommended to specify a name using ‘-name’, otherwise libvirt will auto-assign a name based on the $PID

To reinforce the earlier point, this feature is ONLY targeted at QEMU developers and other people who want to do ad-hoc testing/troubleshooting of QEMU with precise control over all command line arguments. If things break when using this feature, you get to keep both pieces. To drive this home, when attaching to an externally launched guest, it will be marked as tainted, which may limit the level of support a distro / vendor provides. Anyone writing serious production quality virtualization applications should NEVER use this feature. It may make babies cry and kick cute kittens.

This feature will be available in the next release of libvirt, currently planned to be version 0.9.4, sometime near to July 31st, 2011.

With World IPv6 Day last week there have been a few people trying to set up IPv6 connectivity for their virtual guests with libvirt and KVM. For those whose guests are using bridged networking to their LAN, there is really not much to say on the topic. If your LAN has IPv6 enabled and your virtualization host is getting an IPv6 address, then your guests can get IPv6 addresses in exactly the same manner, since they appear directly on the LAN. For those who are using routed / NATed networking with KVM, via the “default” virtual network libvirt creates out of the box, there is a little more work to do. That is what this blog posting will attempt to illustrate.

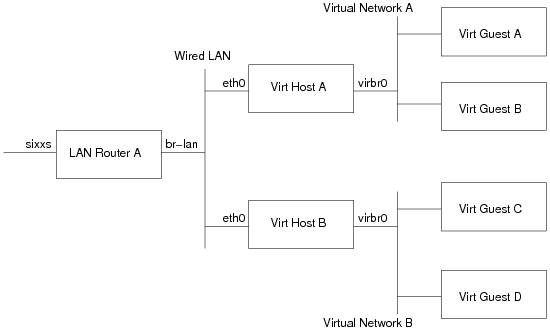

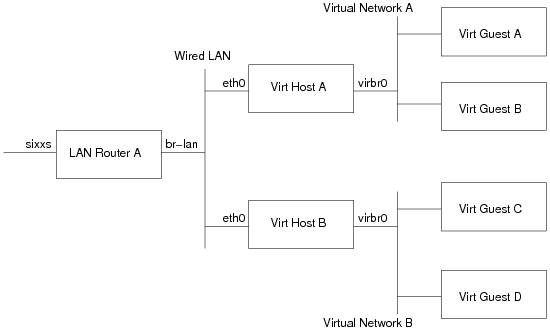

Network architecture

Before continuing further, it probably helps to have a simple network architecture diagram

- LAN Router A: This is the machine which provides IPv6 connectivity to machines on the wired LAN. In my case this is a LinkSys WRT 54GL, running OpenWRT, with an IPv6 tunnel + subnet provided by SIXXS

- Virt Host A/B: These are the 2 hosts which run virtual machines. They are initially getting their IPv6 addresses via stateless autoconf, thanks to the RADVD program on LAN Router A sending out route advertisements.

- Virtual Network A/B: These are the libvirt default virtual networks on Virt Hosts A/B respectively. Out of the box, they both come with an IPv4-only NAT setup under 192.168.122.0/24 using bridge virbr0.

- Virt Guest A/B: These are the virtual machines running on Virt Host A

- Virt Guest C/D: These are the virtual machines running on Virt Host B

The goal is to provide IPv6 connectivity to Virt Guests A, B, C and D, such that every guest can talk to every other guest, every other LAN host and the internet. Every LAN host, should also be able to talk to every Virt Guest, and (firewall permitting) any machines on the entire IPv6 internet should be able to connect to any guest.

Initial network configuration

The initial configuration of the LAN is as follows (NB: my real network addresses have been substituted for fake ones). Details on this kind of setup can be found in countless howtos on the web, so I won’t get into details on how to configure your LAN/tunnel. All that’s important here is the network addressing in use

LAN Router A

The network interface for the AICCU tunnel to my SIXXS POP is called ‘sixxs’, and was assigned the address 2000:beef:cafe::1/64. The ISP also assigned a nice large subnet for use on the site, 2000:dead:beef::/48. The interface for the Wired LAN is called ‘br-lan’. Individual networks are usually assigned a /64, so with the /48 assigned, I have enough addresses to set up 65536 different networks within my site. Skipping the ‘0’ network, for sake of clarity, we give the Wired LAN the second network, 2000:dead:beef:1::/64, and configure the interface ‘br-lan’ with the global IPv6 address 2000:dead:beef:1::1/64. It also has a link local address of fe80::200:ff:fe00:0/64. To allow hosts on the Wired LAN to get IPv6 addresses, the router is also running the radvd daemon, advertising the network prefix. The radvd config on the router looks like:

interface br-lan

{

AdvSendAdvert on;

AdvManagedFlag off;

AdvOtherConfigFlag off;

prefix 2000:dead:beef:1::/64

{

AdvOnLink on;

AdvAutonomous on;

AdvRouterAddr off;

};

};

The address/route configuration looks like this

# ip -6 addr

1: lo: <LOOPBACK,UP>

inet6 ::1/128 scope host

2: br-lan: <BROADCAST,MULTICAST,UP>

inet6 fe80::200:ff:fe00:0/64 scope link

inet6 2000:dead:beef:1::1/64 scope global

# ip -6 route

2000:beef:cafe::/64 dev sixxs metric 256 mtu 1280 advmss 1220

2000:dead:beef:1::/64 dev br-lan metric 256 mtu 1500 advmss 1440

fe80::/64 dev br-lan metric 256 mtu 1500 advmss 1440

ff00::/8 dev br-lan metric 256 mtu 1500 advmss 1440

default via 2000:beef:cafe:1 dev sixxs metric 1024 mtu 1280 advmss 1220

Virt Host A

The network interface for the wired LAN is called ‘eth0’ and is getting an address via stateless autoconf. The NIC has a MAC address 00:11:22:33:44:0a, so it has a link-local IPv6 address of fe80::211:22ff:fe33:440a/64, and via auto-config gets a global address of 2000:dead:beef:1:211:22ff:fe33:440a/64. The address/route configuration looks like

# ip -6 addr

1: lo: mtu 16436

inet6 ::1/128 scope host

3: wlan0: mtu 1500 qlen 1000

inet6 2000:dead:beef:1:211:22ff:fe33:440a/64 scope global dynamic

inet6 fe80::211:22ff:fe33:440a/64 scope link

# ip -6 route

2000:dead:beef:1::/64 dev wlan0 proto kernel metric 256 mtu 1500 advmss 1440

fe80::/64 dev wlan0 proto kernel metric 256 mtu 1500 advmss 1440

default via fe80::200:ff:fe00:0 dev wlan0 proto static metric 1024 mtu 1500 advmss 1440

Virt Host B

The network interface for the wired LAN is called ‘eth0’ and is getting an address via stateless autoconf. The NIC has a MAC address 00:11:22:33:44:0b, so it has a link-local IPv6 address of fe80::211:22ff:fe33:440b/64, and via auto-config gets a global address of 2000:dead:beef:1:211:22ff:fe33:440b/64.

# ip -6 addr

1: lo: mtu 16436

inet6 ::1/128 scope host

3: wlan0: mtu 1500 qlen 1000

inet6 2000:dead:beef:1:211:22ff:fe33:440b/64 scope global dynamic

inet6 fe80::211:22ff:fe33:440b/64 scope link

# ip -6 route

2000:dead:beef:1::/64 dev wlan0 proto kernel metric 256 mtu 1500 advmss 1440

fe80::/64 dev wlan0 proto kernel metric 256 mtu 1500 advmss 1440

default via fe80::200:ff:fe00:0 dev wlan0 proto static metric 1024 mtu 1500 advmss 1440
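The link-local and autoconf addresses quoted for the two hosts are not arbitrary: stateless autoconf derives the low 64 bits from the NIC’s MAC address using the modified EUI-64 rule (flip the universal/local bit of the first octet and insert ff:fe in the middle). A short Python sketch of that derivation follows. The function names are mine and it assumes the host uses plain EUI-64 identifiers rather than privacy or stable-random addresses.

```python
import ipaddress


def eui64_interface_id(mac):
    """Derive the 64-bit modified EUI-64 interface identifier from a MAC."""
    octets = [int(part, 16) for part in mac.split(":")]
    if len(octets) != 6:
        raise ValueError("expected a 48-bit MAC address: %r" % mac)
    octets[0] ^= 0x02  # flip the universal/local bit
    eui = octets[:3] + [0xFF, 0xFE] + octets[3:]  # insert ff:fe in the middle
    return int.from_bytes(bytes(eui), "big")


def autoconf_address(prefix, mac):
    """Combine a /64 prefix with the interface identifier for a MAC."""
    network = ipaddress.IPv6Network(prefix)
    if network.prefixlen != 64:
        raise ValueError("stateless autoconf needs a /64 prefix")
    return ipaddress.IPv6Address(
        int(network.network_address) | eui64_interface_id(mac))


print(autoconf_address("fe80::/64", "00:11:22:33:44:0a"))
print(autoconf_address("2000:dead:beef:1::/64", "00:11:22:33:44:0a"))
```

For Virt Host A’s MAC this yields fe80::211:22ff:fe33:440a and 2000:dead:beef:1:211:22ff:fe33:440a, the same addresses shown in its ‘ip -6 addr’ output.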

Adjusted network configuration

Both Virt Host A and B have a virtual network, so the first thing that needs to be done is to assign an IPv6 subnet for each of them. The /48 subnet has enough space to create 65536 /64 networks, so we just need to pick a couple more. In this case we’ll assign 2000:dead:beef:a::/64 to the default virtual network on Virt Host A, and 2000:dead:beef:b::/64 to Virt Host B.
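The subnet arithmetic is easy to check with Python’s standard ipaddress module. This is just a sketch of the numbering scheme used here; the `subnet` helper is a made-up name, and the prefix is the fake one used throughout this post.

```python
import ipaddress

SITE = ipaddress.IPv6Network("2000:dead:beef::/48")

# A /48 leaves 64 - 48 = 16 bits to number the /64 networks.
NETWORK_COUNT = 2 ** (64 - SITE.prefixlen)


def subnet(site, index):
    """Return the /64 network with the given index inside the site prefix."""
    count = 2 ** (64 - site.prefixlen)
    if not 0 <= index < count:
        raise ValueError("subnet index out of range: %d" % index)
    base = int(site.network_address) + (index << 64)
    return ipaddress.IPv6Network((base, 64))


print(NETWORK_COUNT)
print(subnet(SITE, 0x1))  # wired LAN
print(subnet(SITE, 0xa))  # virtual network on Virt Host A
print(subnet(SITE, 0xb))  # virtual network on Virt Host B
```

This confirms there are 65536 possible /64 networks, and that indexes 1, 0xa and 0xb correspond to 2000:dead:beef:1::/64, 2000:dead:beef:a::/64 and 2000:dead:beef:b::/64.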

LAN Router A

The first configuration task is to tell the LAN router how each of these new networks can be reached. This requires adding a static route for each subnet, directing traffic to the link local address of the respective Virt Host. You might think you can use the global address of the virt host, rather than its link local address. I had tried that at first, but strange things happened, so sticking to the link local addresses seems best

2000:dead:beef:a::/64 via fe80::211:22ff:fe33:440a dev br-lan metric 256 mtu 1500 advmss 1440

2000:dead:beef:b::/64 via fe80::211:22ff:fe33:440b dev br-lan metric 256 mtu 1500 advmss 1440

One other thing to beware of is that the ‘ip6tables’ FORWARD chain may have a rule which prevents forwarding of traffic. Make sure traffic can flow to/from the 2 new subnets. In my case I added a generic rule that allows any traffic that originates on br-lan, to be sent back on br-lan. Traffic from ‘sixxs’ (the public internet) is only allowed in if it is related to an existing connection, or a whitelisted host I own.

Virt Host A

Remember how all hosts on the wired LAN can automatically configure their own IPv6 addresses / routes using stateless autoconf, thanks to radvd? Well, unfortunately, now that the virt host is going to start routing traffic to/from the guests, it can’t use autoconf anymore :-( By default Linux stops accepting route advertisements on an interface once IPv6 forwarding is turned on. So the first job is to alter the host configuration, so that eth0 uses a statically configured IPv6 address, or gets an address from DHCPv6. If you skip this bit, things may appear to work fine at first, but next time you reconnect to the LAN, autoconf will fail.

Now that the host is not using autoconf, it is time to reconfigure libvirt to add IPv6 to the virtual network. To do this, we stop the virtual network, and then edit its XML

# virsh net-destroy default

# virsh net-edit default

....vi launches..

In the editor, we need to insert a snippet of XML giving details of the subnet assigned to this host, 2000:dead:beef:a::/64. Again we pick address ‘1’ for the host interface, virbr0.

<ip family='ipv6' address='2000:dead:beef:a::1' prefix='64'/>

After exiting the editor, simply start the network again

# net-start default

If all went to plan, virbr0 will now have an IPv6 address, and a route will be present. There will also be an radvd process running on the host advertising the network.

/usr/sbin/radvd --debug 1 --config /var/lib/libvirt/radvd/default-radvd.conf --pidfile /var/run/libvirt/network/default-radvd.pid-bin

If you look at the auto-generated configuration file, it should contain something like

interface virbr0

{

AdvSendAdvert on;

AdvManagedFlag off;

AdvOtherConfigFlag off;

prefix 2000:dead:beef:a::1/64

{

AdvOnLink on;

AdvAutonomous on;

AdvRouterAddr off;

};

};

Virt Host B

As with the previous Virt Host A, the first configuration task is to switch the host eth0 from using autoconf, to a static IPv6 address configuration, or DHCPv6. Then it is simply a case of running the same virsh commands, but using this host’s subnet, 2000:dead:beef:b::/64

<ip family='ipv6' address='2000:dead:beef:b::1' prefix='64'/>

The guest setup

At this stage, it should be possible to ping & ssh to the address of the ‘virbr0’ interface of each virt host, from anywhere on the wired LAN. If this isn’t working, or if ping works, but ssh fails, then most likely the LAN router has missing/incorrect routes for the new subnets, or there is a firewall blocking traffic on the LAN router, or Virt Host A/B. In particular check the FORWARD chain in ip6tables.

Assuming this all works though, it should now be possible to start some guests on Virt Host A / B. In this case the guest will of course have a NIC configuration that uses the ‘default’ network:

<interface type='network'>

<mac address='52:54:00:e6:1f:01'/>

<source network='default'/>

</interface>

Starting up this guest, autoconfiguration should take place, resulting in it getting an address based on the virtual network prefix and the MAC address

# ip -6 addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436

inet6 ::1/128 scope host

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qlen 1000

inet6 2000:dead:beef:a:5054:ff:fee6:1f01/64 scope global dynamic

inet6 fe80::5054:ff:fee6:1f01/64 scope link

# ip -6 route

2000:dead:beef:a::/64 dev eth0 proto kernel metric 256 mtu 1500 advmss 1440

fe80::/64 dev eth0 proto kernel metric 256 mtu 1500 advmss 1440

default via fe80::211:22ff:fe33:440a dev eth0 proto kernel metric 1024 mtu 1500 advmss 1440

A guest running on Host A ought to be able to connect to a guest on Host B, and vice versa, and any host on the LAN should be able to connect to any guest.