A common question from developers and users new to libvirt is what the benefit of using libvirt is over scripting or developing directly against QEMU/KVM. This blog posting attempts to answer that by outlining features exposed in the libvirt QEMU/KVM driver that would not be automatically available to users of the lower level QEMU command line/monitor.

“All right, but apart from the sanitation, medicine, education, wine, public order, irrigation, roads, the fresh water system and public health, what have the Romans ever done for us?”

Insurance against QEMU/KVM being replaced by new $SHINY virt thing

Linux virtualization technology has been through many iterations. In the beginning there was User Mode Linux, which was widely used by many ISPs offering Linux virtual hosting. Then along came Xen, which was shipped by many enterprise distributions and replaced much usage of UML. QEMU has been around for a long time, but when KVM was added to the Linux kernel and integrated with QEMU, it became the new standard for Linux host virtualization, often replacing the usage of Xen. Application developers may think

“QEMU/KVM is the Linux virtualization standard for the future, so why do we need a portable API?”

To think this way is to ignore the lessons of history, which are that every virtualization technology that has existed in Linux thus far has been replaced, rewritten or obsoleted. The current QEMU/KVM userspace implementation may turn out to be the exception to the rule, but do you want to bet on that? There is already a new experimental KVM userspace application being developed by a group of LKML developers which may one day replace the current QEMU based KVM userspace. libvirt exists to provide application developers an insurance policy should this come to pass, by isolating application code from the specific implementation of the low level userspace infrastructure.

Long term stable API and XML format

The libvirt API is an append-only API, so once added an existing API will never be removed or changed. This ensures that applications can expect to operate unchanged across multiple major RHEL releases, even if the underlying virtualization technology changes the way it operates. Likewise the XML format is append-only, so even if the underlying virt technology changes its configuration format, apps will continue to work unchanged.

Automatic compliance with changes in KVM command line syntax “best practice”

As new releases of KVM come out, the “best practice” recommendations for invoking KVM processes evolve. The libvirt QEMU driver attempts to always follow the latest “best practice” for invoking the KVM version it finds

Example: Disk specification changes

- v1: Original QEMU syntax:

-hda filename

- v2: RHEL5 era KVM syntax

-drive file=filename,if=ide,index=0

- v3: RHEL6 era KVM syntax

-drive file=filename,id=drive-ide0-0-0,if=none \
    -device ide-drive,bus=ide.0,unit=0,drive=drive-ide0-0-0,id=ide-0-0-0

- v4: (possible) RHEL-7 era KVM syntax:

-blockdev ...someargs... \
    -device ide-drive,bus=ide.0,unit=0,blockdev=bdev-ide0-0-0,id=ide-0-0-0
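The kind of decision libvirt makes here can be sketched in a few lines of Python. This is only an illustration, not libvirt's actual code: the function name `disk_args` and the capability names `"drive"` and `"device"` are hypothetical, and the possible v4 `-blockdev` form is left out because its arguments are not spelled out above.

```python
def disk_args(filename, caps):
    """Build disk arguments for the first IDE disk.

    `caps` is a set of hypothetical capability names describing what the
    detected KVM binary understands.
    """
    if "device" in caps:
        # v3: backend (-drive) and guest-visible device (-device) are separate
        return [
            "-drive", f"file={filename},id=drive-ide0-0-0,if=none",
            "-device",
            "ide-drive,bus=ide.0,unit=0,drive=drive-ide0-0-0,id=ide-0-0-0",
        ]
    if "drive" in caps:
        # v2: single -drive argument
        return ["-drive", f"file={filename},if=ide,index=0"]
    # v1: original QEMU syntax
    return ["-hda", filename]


print(disk_args("guest.img", {"drive"}))
```

The application only ever describes "an IDE disk backed by this file"; which of the syntaxes gets used is decided at the point the guest is started.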

Isolation against breakage in KVM command line syntax

Although QEMU attempts to maintain compatibility of command line flags across releases, there have been cases where options have been removed / changed. This has typically occurred as a result of changes which were in the KVM branch, and then done differently when the code was merged back into the main QEMU GIT repository. libvirt detects what is required for the QEMU binary and uses the appropriate supported syntax:

Example: Boot order changes for virtio.

- v1: Original QEMU syntax (didn’t support virtio)

-boot c

- v2: RHEL5 era KVM syntax

-drive file=filename,if=virtio,index=0,boot=on

- v3: RHEL-6.0 KVM syntax

-drive file=filename,id=drive-virtio0-0-0,if=none,boot=on \
    -device virtio-blk-pci,bus=pci0.0,addr=1,drive=drive-virtio0-0-0,id=virtio-0-0-0

- v4: RHEL-6.1 KVM syntax (boot=on was removed in the update, changing syntax wrt RHEL-6.0)

-drive file=filename,id=drive-virtio0-0-0,if=none,bootindex=1 \
    -device virtio-blk-pci,bus=pci0.0,addr=1,drive=drive-virtio0-0-0,id=virtio-0-0-0

Transparently take advantage of new features in KVM

Some new features introduced in KVM can be automatically enabled / used by the libvirt QEMU driver without requiring changes to applications using libvirt. Applications thus benefit from these new features without expending any further development effort

Example: Stable machine ABI in RHEL-6.0

The application provided XML requests a generic ‘pc’ machine type. libvirt automatically queries KVM to determine the supported machine types and canonicalizes ‘pc’ to the stable versioned machine type ‘pc-0.12’ and preserves this in the XML configuration. Thus even if the host is updated to KVM 0.13, existing guests will continue to run with the ‘pc-0.12’ ABI, and thus avoid potential driver breakage or guest OS re-activation. It also makes it possible to migrate the guest between hosts running different versions of KVM.
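The canonicalization step can be pictured with a small Python sketch. It is an assumption-laden model rather than libvirt's implementation: the function `canonical_machine` and the dictionaries describing which machine types each KVM version supports are made up for illustration.

```python
def canonical_machine(requested, supported):
    """Resolve a machine type alias to its versioned name.

    `supported` maps each versioned machine type to the alias it currently
    answers to (or None), as a hypothetical stand-in for what KVM reports.
    """
    for name, alias in supported.items():
        if alias == requested:
            return name
    if requested in supported:
        return requested
    raise ValueError(f"unsupported machine type: {requested}")


kvm_0_12 = {"pc-0.12": "pc", "pc-0.11": None}
kvm_0_13 = {"pc-0.13": "pc", "pc-0.12": None, "pc-0.11": None}

stored = canonical_machine("pc", kvm_0_12)   # saved in the guest config
print(stored)
print(canonical_machine(stored, kvm_0_13))   # unchanged after the host update
```

Because the versioned name is what gets stored, the later change of what ‘pc’ means on the updated host does not affect the existing guest.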

Example: Stable PCI device addressing in RHEL-6.0

The application provided XML requests 4 PCI devices (balloon, 2 disks & a NIC). libvirt automatically assigns PCI addresses to these devices and ensures that every time the guest is launched the exact same PCI addresses are used for each device. It will also manage PCI addresses when doing PCI device hotplug and unplug, so the sequence boot; unplug nic; migrate will result in stable PCI addresses on the target host.
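As a rough sketch of the idea in Python (not libvirt's real allocator): addresses that were assigned once are remembered, and only devices without a remembered address get a new one. The function name `assign_pci_slots`, the device names and the starting slot number 3 are all assumptions chosen for the example.

```python
def assign_pci_slots(devices, saved=None, first_free=3):
    """Return a device -> PCI slot mapping that is stable across launches.

    `saved` is the mapping recorded on a previous launch; `first_free` is an
    arbitrary starting slot picked for this illustration.
    """
    # Keep remembered slots for devices that are still present
    slots = {dev: slot for dev, slot in (saved or {}).items() if dev in devices}
    used = set(slots.values())
    next_slot = first_free
    for dev in devices:
        if dev in slots:
            continue
        while next_slot in used:
            next_slot += 1
        slots[dev] = next_slot
        used.add(next_slot)
    return slots


boot = assign_pci_slots(["balloon", "disk0", "disk1", "nic"])
after_unplug = assign_pci_slots(["balloon", "disk1", "nic"], saved=boot)
print(boot)
print(after_unplug)
```

After the unplug, the remaining devices keep the slots they had at boot, which is the property that matters for migration to a target host.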

Automatic compliance with changes in KVM monitor syntax best practice

In the same way that KVM command line argument “best practice” changes between releases, so does the monitor “best practice”. The libvirt QEMU driver aims to always be in compliance with the “best practice” recommendations for the version of KVM being used.

Example: monitor protocol changes

- v1: Human parsable monitor in RHEL5 era or earlier KVM

- v2: JSON monitor in RHEL-6.0

- v3: JSON monitor, with HMP passthrough in RHEL-6.1

Isolation against breakage in KVM monitor commands

Upstream QEMU releases have generally avoided changing monitor command syntax, but the KVM fork of QEMU has not been so lucky when merging changes back into mainline QEMU. Likewise, OS distros sometimes introduce custom commands in their branches.

Example: Method for setting VNC/SPICE passwords

- v1: RHEL-5 using the ‘change’ command only for VNC, or ‘set_ticket’ for SPICE

'change vnc 123456'

'set_ticket 123456'

- v2: RHEL-6.0 using the (non-upstream) ‘__com.redhat__set_password’ for SPICE or VNC

{ "execute": "__com.redhat__set_password", "arguments": { "protocol": "spice", "password": "123456", "expiration": "60" } }

- v3: RHEL-6.1 using the upstream ‘set_password’ and ‘expire_password’ commands

{ "execute": "set_password", "arguments": { "protocol": "spice", "password": "123456" } }

{ "execute": "expire_password", "arguments": { "protocol": "spice", "time": "+60" } }
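To make the point concrete, here is a hedged Python sketch of the dispatch an application would otherwise have to carry itself. The function `password_commands` and the release labels are hypothetical; the command strings are the ones listed above. The RHEL-5 branch ignores the expiry because no expiry syntax is shown for it above.

```python
import json


def password_commands(release, protocol, password, expiry_secs):
    """Return the monitor commands to set a graphics password."""
    if release == "rhel5":
        # Human monitor: plain text commands, one per protocol
        if protocol == "vnc":
            return [f"change vnc {password}"]
        return [f"set_ticket {password}"]
    if release == "rhel6.0":
        # JSON monitor with the non-upstream command
        return [json.dumps({
            "execute": "__com.redhat__set_password",
            "arguments": {"protocol": protocol, "password": password,
                          "expiration": str(expiry_secs)},
        })]
    if release == "rhel6.1":
        # JSON monitor with the upstream pair of commands
        return [
            json.dumps({"execute": "set_password",
                        "arguments": {"protocol": protocol,
                                      "password": password}}),
            json.dumps({"execute": "expire_password",
                        "arguments": {"protocol": protocol,
                                      "time": f"+{expiry_secs}"}}),
        ]
    raise ValueError(f"unknown release: {release}")


for line in password_commands("rhel6.1", "spice", "123456", 60):
    print(line)
```

With libvirt in between, the application makes the same API call in all three cases and never sees this branching.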

Security isolation with SELinux / AppArmor

When all guests run under the same user ID, any single KVM process which is exploited by a malicious guest, will result in compromise of all guests running on the host. If the management software runs as the same user ID as the KVM processes, the management software can also be exploited.

sVirt integration in libvirt ensures that every guest is assigned a dedicated security context, which strictly confines what resources it can access. Thus even if a guest can read/write files based on matching UID/GID, the sVirt layer will block access, unless the file / resource was explicitly listed in the guest configuration file.

Security isolation using POSIX DAC

An additional security driver uses traditional POSIX DAC capabilities to isolate guests from the host, by running as an unprivileged UID and GID pair. Future enhancements will run each individual guest under a dedicated UID.

Security isolation using Linux container namespaces

Future releases will take advantage of recent advances in Linux container namespace capabilities. Every QEMU process will be placed into a dedicated PID namespace, preventing QEMU from seeing any processes on the system, should it be exploited. A dedicated network namespace will block access to all host network devices and only allow access to TAP device FDs, preventing an exploited guest from making arbitrary network connections to the outside world. When QEMU gains support for a ‘fd:’ disk protocol, a dedicated filesystem namespace will provide a very secure chrooted environment where it can only use file descriptors passed in from libvirt. UID/GID namespaces will allow separation of QEMU UID/GID for each process, even though from the host POV all processes will be under the same UID/GID.

Avoidance of shell code for most operations

Use of the shell for invoking management commands is susceptible to exploit by users by providing data which gets interpreted as shell metadata characters. It is very hard to get escaping correctly applied, so libvirt has an advanced set of APIs for invoking commands which avoid all use of the shell. They are also able to guarantee that no file descriptors from the management layer leak down into the QEMU process where they could be exploited.
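The same principle can be shown with Python's standard `subprocess` module. This is not libvirt's implementation, just an illustration of the technique: the command is passed as an argument list, so no shell ever interprets the data, and `close_fds=True` keeps the parent's other file descriptors out of the child. The helper name `run_without_shell` and the sample "untrusted" string are made up for the example.

```python
import subprocess
import sys


def run_without_shell(argv):
    """Run a command given as an argument list and return its stdout."""
    result = subprocess.run(
        argv,
        shell=False,          # arguments go straight to exec, no shell parsing
        close_fds=True,       # do not leak the parent's file descriptors
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


# Data containing shell metacharacters arrives in the child unmodified
untrusted = "guest.img; echo pwned"
out = run_without_shell(
    [sys.executable, "-c", "import sys; print(sys.argv[1])", untrusted]
)
print(out, end="")
```

No escaping is needed anywhere, because the untrusted value is only ever treated as a single argument.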

Secure remote access to management APIs

The libvirt local library API can be exposed over TCP using TLS, x509 certificates and/or Kerberos. This provides secure remote access to all KVM management APIs, with parity of functionality vs local API usage. The secure remote access is a validated part of the common criteria certifications

Operation audit trails

All operations where there is an association between a virtual machine and a host resource will result in one or more audit records being generated via the Linux auditing subsystem. This provides a clear record of key changes in the virtualization state. This functionality is typically a mandatory requirement for deployment into government / defence / financial organizations.

Security certification for Common Criteria

libvirt’s QEMU/KVM driver has gone through Common Criteria certification. This provides assurance for the host management virtualization stack, which again, is typically a mandatory requirement for deployment into government / defense related organizations. The core components in this certification are the sVirt security model and the audit subsystem integration

Integration with cgroups for resource control

All guests are automatically placed into cgroups. This allows control of CPU scheduler priority between guests, block I/O bandwidth tunables and memory utilization tunables. The devices cgroups controller provides a further line of defence, blocking guest access to char & block devices on the host in the unlikely event that another layer of protection fails. The CPU accounting group will also enable querying of the per-physical CPU utilization for guests, which is not available via any traditional /proc file.

Integration with libnuma for memory/cpu affinity

QEMU does not provide any native support for controlling NUMA affinity via its command line. libvirt integrates with libnuma and/or sched_setaffinity for memory and CPU pinning respectively

CPU compatibility guarantees upon migration

libvirt directly models the host and guest CPU feature sets. A variety of policies are available to control what features become visible to the guest, and strictly validate compatibility of host CPUs across migration. Further APIs are provided to allow apps to query CPU compatibility ahead of time, and to enable computation of common feature sets between CPUs. No comparable API exists elsewhere.
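Conceptually, the two operations described are set arithmetic on CPU feature flags. The Python sketch below only illustrates that idea under simplifying assumptions; the function names and the per-host feature lists are invented, and libvirt's real CPU model handling is considerably richer than a plain set comparison.

```python
def common_features(host_cpus):
    """Features present on every host: a safe baseline for guests."""
    feature_sets = [set(features) for features in host_cpus]
    if not feature_sets:
        return set()
    return set.intersection(*feature_sets)


def is_compatible(guest_features, host_features):
    """A guest can run on a host that offers every feature the guest sees."""
    return set(guest_features) <= set(host_features)


# Hypothetical feature lists for two hosts in a migration cluster
host_a = ["sse2", "ssse3", "sse4.1", "aes"]
host_b = ["sse2", "ssse3", "sse4.1"]

baseline = common_features([host_a, host_b])
print(sorted(baseline))
print(is_compatible(host_a, host_b))    # guest exposing all of host_a on host_b
print(is_compatible(baseline, host_b))  # guest restricted to the baseline
```

A guest started with only the baseline features can move between both hosts, while one that was given every feature of the first host cannot.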

Host network filtering of guest traffic

The libvirt network filter APIs allow definition of a flexible set of policies to control network traffic seen by guests. The filter configurations are automatically translated into a set of rules in ebtables, iptables and ip6tables. The rules are automatically created when the guest starts and torn down when it stops. The rules are able to automatically learn the guest IP address through ARP traffic, or DHCP responses from a (trusted) DHCP server. The data model is closely aligned with the DMTF industry spec for virtual machine network filtering.

Libvirt ships with a default set of filters which can be turned on to provide ‘clean’ traffic from the guest, i.e. it blocks ARP spoofing and IP spoofing by the guest which would otherwise DoS other guests on the network.

Secure migration data transport

KVM does not provide any secure migration capability. The libvirt KVM driver adds support for tunnelling migration data over a secure libvirtd managed data channel

Management of encrypted virtual disks for guests

An API set for managing secret keys enables a secure model for deploying guests to untrusted shared storage. By separating management of the secret keys from the guest configuration, it is possible for the management app to ensure a guest can only be started by an authorized user on an authorized host, and prevent restarts once it shuts down. This leverages the Qcow2 data encryption capabilities and is the only method to securely deploy a guest if the storage admins / network are not trusted.

Seamless migration of SPICE clients

The libvirt migration protocol automatically invokes the KVM graphics client migration commands at the right point in the migration process to relocate connected clients.

Integration with arbitrary lock managers

The lock manager API allows strict guarantees that a virtual machine’s disks can only be in use by one process in the cluster. Pluggable implementations enable integration with different deployment scenarios. Implementations available can use Sanlock, POSIX locks (fcntl), and in the future DLM (clustersuite). Leases can be explicitly provided by the management application, or zero-conf based on the host disks assigned to the guests.

Host device passthrough

Integration between host device management APIs and KVM device assignment allows for safe passthrough of PCI devices from the host. libvirt automatically ensures that the device is detached from the host OS before guest startup, and re-attached after shutdown (or equivalent for hotplug/unplug). PCI topology validation ensures that the devices can be safely reset, with FLR, Power management reset, or secondary bus reset.

Host device API enumeration

The host device APIs allow querying what devices are present on the host. This enables applications wishing to perform device assignment to determine which PCI devices are available on the host and how they are used by the host OS, e.g. to detect the case where a user asked to assign a device that is currently used as the host eth0 interface.

NPIV SAN management

The host device APIs allow creation and deletion of NPIV SCSI interfaces. This in turn provides access to sets of LUNs, which can be associated with individual guests. This allows the virtual SCSI HBA to “follow” the guest wherever it may be running.

Core dump integration with “crash”

The libvirt core dump APIs allow a guest memory dump to be obtained and saved into a format which can then be analysed by the Linux crash tool.

Access to guest dmesg logs even for crashed guests

The libvirt API for peeking into guest memory regions allows the ‘virt-dmesg’ tool to extract recent kernel log messages from a guest. This is possible even when the guest kernel has crashed and thus no guest agent is otherwise available.

Cross-language accessibility of APIs.

libvirt provides a stable API which allows KVM to be managed from C, Perl, Python, Java, C#, OCaml and PHP. Components of the management stack are not tied into all using the same language.

Zero-conf host network access

Out of the box libvirt provides a NAT based network which allows guests to have outbound network access on a host, whether running wired, or wireless, or VPN, without needing any administrator setup ahead of time. While QEMU provides the ‘SLIRP’ networking mode, this has very low performance and does not integrate with the networking filters capabilities libvirt also provides, nor does it allow guests to communicate with each other

Storage APIs for managing common host storage configurations

Few APIs exist for managing storage on Linux hosts. libvirt provides a general purpose storage API which allows use of NFS shares; formatting & use of local filesystems; partitioning of block devices; creation of LVM volume groups and creation of logical volumes within them; enumeration of LUNs on SCSI HBAs; login/out of iSCSI servers & access to their LUNs; automatic discovery of NFS exports, LVM volume groups and iSCSI targets; creation and cloning of non-raw disk formats, optionally including encryption; enumeration of multipath devices.

Integration with common monitoring tools

Plugins are available for munin, nagios and collectd to enable performance monitoring of all guests running on a host / in a network. Additional 3rd party monitoring tools can easily integrate by using a readonly libvirt connection to ensure they don’t interfere with the functional operation of the system.

Expose information about virtual machines via SNMP

The libvirt SNMP agent allows information about guests on a host to be exposed via SNMP. This allows industry standard tools to report on what guests are running on each host. Optionally SNMP can also be used to control guests and make changes

Expose information & control of host via CIM

The libvirt CIM agent exposes information about, and allows control of, virtual machines, virtual networks and storage pools via the industry standard DMTF CIM schema for virtualization.

Expose information & control of host via AMQP/QMF

The libvirt AMQP agent (libvirt-qpid) exposes information about, and allows control of, virtual machines, virtual networks and storage pools via the QMF object modelling system, running on top of AMQP. This allows data center wide view & control of the state of virtualization hosts.

The libvirt library uses a number of approaches to versioning to cover various usage scenarios:

- Software release versions

- Package manager versions (RPM)

- pkgconfig version

- libtool version

- ELF library soname/version

- ELF symbol version

The goal of libvirt is to never change the library ABI, in other words, once an API is added, struct declared, macro declared, etc it can never be changed. If an API was found flawed, the existing API may be annotated as deprecated, but it will never be removed. Instead a new alternative API is added.

Each new software release has an associated 3 component version number, eg 0.6.3, 0.8.0, 0.8.1. There is no strict policy on the significance of each component. At time of writing, the major component has never been changed. The minor component is changed when there is significant new functionality added to the library (usually a major collection of new APIs). The micro component is changed in each release and reset to zero when the minor component changes. So from an application developer’s point of view, if they want to use a particular API they look for the software release version that introduced the new API.
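When comparing such version numbers, each component has to be compared as a number rather than as text, otherwise 0.10.0 would sort before 0.8.3. A minimal Python sketch of an "at least this version" test follows; the helper names are made up, and it only handles plain dotted numeric versions like the ones shown here.

```python
def parse_version(text):
    """Split 'major.minor.micro' into a tuple of integers."""
    return tuple(int(part) for part in text.split("."))


def at_least(found, required):
    """True if the release `found` is the same as or newer than `required`."""
    return parse_version(found) >= parse_version(required)


print(at_least("0.8.3", "0.8.3"))
print(at_least("0.10.0", "0.8.3"))
print(at_least("0.6.0", "0.8.3"))
```

Tuples compare element by element, which matches the major, minor, micro ordering described above.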

The text that follows often uses libvirt as an example. The principles / effects described apply to any ELF library and are not specific to libvirt.

Simple compile time version checking

Applications building against libvirt will make use of the pkgconfig tool to probe for existence of libvirt. The software release version is used, unchanged, as the pkgconfig version number. Thus if an application knows the API it wants was introduced in version 0.8.3, it will typically perform a compile time check like

$ pkg-config --atleast-version=0.8.3 libvirt \
    && echo "Found" || echo "Missing"

Or in autoconf macros

PKG_CHECK_MODULES([LIBVIRT], [libvirt >= 0.8.3])

This will result in compiler/linker flags that look something like

-lvirt

Or

-I/home/berrange/usr/include -L/home/berrange/usr/lib -lvirt

When the compiler links the application to libvirt, it will take the libvirt ELF soname and embed it in the application. The libvirt ELF soname is simply “libvirt.so.0” and since libvirt policy is to maintain ABI, it will never change.

libtool also includes library versioning magic that is intended to allow for version checks that are independent of the software release version. Few libraries ever fully make use of this capability since it is poorly understood, easy to get wrong and doesn’t integrate with the rest of the toolchain / OS distro. libvirt sets libtool version based on the package release version. It won’t be discussed further.

Simple runtime version checking

When an application is launched the ELF loader will attempt to resolve the linked libraries and verify their current soname matches that embedded in the application. Since libvirt will never break ABI, the soname check will always succeed. It should be clear that the soname check is a very weak versioning scheme. The application may have been linked against version 0.8.3, but the installed version it is run against could be 0.6.0 and this mismatch would not be caught by the soname check.

Installation version checking

The software release version is also used unchanged for the package manager version field. In RPM packages there is an extra ‘release’ component appended to the version for extra fine checking.

Thus the package manager can be used to provide stronger runtime version guarantee, by checking the full version number at installation time. The aforementioned example application would typically want to include a dependency

Requires: libvirt >= 0.8.3

The RPM package manager, however, also has support for automatic dependency extraction. For this it will extract the soname from any ELF libraries the application links against and secretly add statements of the form

Requires: libvirt.so.0()(64bit)

The libvirt RPM itself also gains secret statements of the form

Provides: libvirt.so.0()(64bit)

Since this is based on the soname though, these automatic dependencies are a very weak versioning scheme.

Symbol versioning

Most linkers will provide library developers with a way to control which symbols are exported for use by applications. This may be done via annotations in the source file, or more often via a standalone “linker script” which simply whitelists symbols to be exported.

The GNU ELF linker has support for doing more than simply whitelisting symbols. It allows symbols to be grouped together and a version number attached to each group. A group can also be annotated as being a child of another group. In this manner you can create trees of versioned symbols, although most common libraries will only use one path without branching.

The libvirt library uses the software release version to group symbols which were added in that release. The top levels of the libvirt tree are logically arranged like:

+------------------------+
|     LIBVIRT_0.0.3      |
+------------------------+
| virConnectClose        |
| virConnectOpen         |
| virConnectListDomains  |
| ....                   |
+------------------------+
            |
            V
+------------------------+
|     LIBVIRT_0.0.5      |
+------------------------+
| virDomainGetUUID       |
| virConnectLookupByUUID |
+------------------------+
            |
            V
+------------------------+
|     LIBVIRT_0.1.0      |
+------------------------+
| virInitialize          |
| virNodeGetInfo         |
| virDomainReboot        |
| ....                   |
+------------------------+
            |
            V
           ....
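The chain of version groups can be modelled as a simple ordered list, which is enough to answer the two questions that matter later on: which version a symbol belongs to, and which symbols exist as of a given version. This Python sketch is only a model of the diagram, using the symbol names shown in it; the function names are invented and it does not read a real linker script.

```python
# Ordered from the root of the tree downwards, one entry per version group
VERSION_TREE = [
    ("LIBVIRT_0.0.3", ["virConnectClose", "virConnectOpen",
                       "virConnectListDomains"]),
    ("LIBVIRT_0.0.5", ["virDomainGetUUID", "virConnectLookupByUUID"]),
    ("LIBVIRT_0.1.0", ["virInitialize", "virNodeGetInfo", "virDomainReboot"]),
]


def version_of(symbol):
    """Return the version group a symbol was added in, or None."""
    for version, symbols in VERSION_TREE:
        if symbol in symbols:
            return version
    return None


def symbols_as_of(version):
    """All symbols in the named group and in every group above it."""
    collected = []
    for node, symbols in VERSION_TREE:
        collected.extend(symbols)
        if node == version:
            return collected
    raise KeyError(version)


print(version_of("virDomainGetUUID"))
print(symbols_as_of("LIBVIRT_0.0.5"))
```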

Runtime version checking with version symbols

When a library is built normally, the ELF headers will contain a list of plain symbol names:

$ eu-readelf -s /usr/lib64/libvirt.so | grep 'GLOBAL DEFAULT' | awk '{print $8}'

virConnectOpen

virConnectClose

virDomainGetUUID

....

When a library is built with versioned symbols though, the name is mangled to include a version string:

$ eu-readelf -s /usr/lib64/libvirt.so | grep 'GLOBAL DEFAULT' | awk '{print $8}'

virConnectOpen@@LIBVIRT_0.0.3

virConnectClose@@LIBVIRT_0.0.3

virDomainGetUUID@@LIBVIRT_0.0.5

....

Similarly when an application is built against a normal library, the ELF headers will contain a list of plain symbol names that it uses:

$ eu-readelf -s /usr/bin/virt-viewer | grep 'GLOBAL DEFAULT' | awk '{print $8}'

virConnectOpenAuth

virDomainFree

virDomainGetID

And as expected, when built against a library with versioned symbols, the application ELF headers will contain symbols mangled to include a version string:

$ eu-readelf -s /usr/bin/virt-viewer | grep 'GLOBAL DEFAULT' | awk '{print $8}'

virConnectOpenAuth@LIBVIRT_0.4.0

virDomainFree@LIBVIRT_0.0.3

virDomainGetID@LIBVIRT_0.0.3

When the application is launched, the linker is now able to perform strict version checking. For each symbol ‘FOO’

- If application listed an unversioned 'FOO'
    - If library has an unversioned 'FOO'
        => Success
    - Else library has a versioned 'FOO'
        => Success
- Else application listed a versioned 'FOO'
    - If library has an unversioned 'FOO'
        => Fail
    - Else library has a versioned 'FOO'
        - If versions of 'FOO' match
            => Success
        - Else versions don't match
            => Fail

Notice that an application with unversioned symbols will work with a versioned library. A versioned application will not work with an unversioned library. This allows for versioned symbols to be introduced to an existing unversioned library without causing an ABI compatibility problem.
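The rules for a single symbol translate directly into code. The following Python function is a sketch of the decision tree as described in this post, not of the real ELF loader; the name `resolves` and the dictionary representation of a library are assumptions, and the "symbol missing entirely" case is added here as an obvious failure even though the tree above does not list it.

```python
def resolves(name, wanted_version, library):
    """Check one application symbol against a library.

    `wanted_version` is None for an unversioned reference. `library` maps
    symbol names to their version string, or None if exported unversioned.
    """
    if name not in library:
        return False                      # assumption: missing symbol fails
    provided_version = library[name]
    if wanted_version is None:
        return True                       # unversioned app symbol: always ok
    if provided_version is None:
        return False                      # versioned app, unversioned library
    return wanted_version == provided_version


versioned_lib = {"virConnectOpen": "LIBVIRT_0.0.3"}
plain_lib = {"virConnectOpen": None}

print(resolves("virConnectOpen", None, versioned_lib))
print(resolves("virConnectOpen", "LIBVIRT_0.0.3", plain_lib))
print(resolves("virConnectOpen", "LIBVIRT_0.0.3", versioned_lib))
print(resolves("virConnectOpen", "LIBVIRT_0.4.0", versioned_lib))
```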

With an application and library both using versioned symbols, the compile time requirement the developer declared to pkgconfig with “libvirt >= 0.8.3” is now effectively encoded in the binary via symbol versioning. Thus a fairly strict check can be performed at runtime to ensure the correct library is installed.

Installation version checking with versioned symbols

Remember that the RPM automatic dependencies extractor will find the ELF soname in libraries/applications. When using versioned symbols, it can go one step further and find the symbol version strings too.

The libvirt RPM will thus automatically get extra versioned dependencies

$ rpm -q libvirt-client --provides | grep libvirt

libvirt.so.0()(64bit)

libvirt.so.0(LIBVIRT_0.0.3)(64bit)

libvirt.so.0(LIBVIRT_0.0.5)(64bit)

libvirt.so.0(LIBVIRT_0.1.0)(64bit)

libvirt.so.0(LIBVIRT_0.1.1)(64bit)

libvirt.so.0(LIBVIRT_0.1.4)(64bit)

libvirt.so.0(LIBVIRT_0.1.5)(64bit)

An application will also automatically get extra data based on the versions of the symbols it links against

$ rpm -q virt-viewer --requires | grep libvirt

libvirt.so.0()(64bit)

libvirt.so.0(LIBVIRT_0.0.3)(64bit)

libvirt.so.0(LIBVIRT_0.0.5)(64bit)

libvirt.so.0(LIBVIRT_0.1.0)(64bit)

libvirt.so.0(LIBVIRT_0.4.0)(64bit)

libvirt.so.0(LIBVIRT_0.5.0)(64bit)

This example shows that this virt-viewer cannot run against this libvirt, since the libvirt library is missing versions 0.4.0 and 0.5.0
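The check RPM is effectively doing here is a set difference between what the application requires and what the library package provides. A small Python sketch using the two listings above as data (the helper name `missing_requirements` is made up):

```python
def missing_requirements(requires, provides):
    """Return the requirements that no installed package provides."""
    return sorted(set(requires) - set(provides))


libvirt_provides = [
    "libvirt.so.0()(64bit)",
    "libvirt.so.0(LIBVIRT_0.0.3)(64bit)",
    "libvirt.so.0(LIBVIRT_0.0.5)(64bit)",
    "libvirt.so.0(LIBVIRT_0.1.0)(64bit)",
    "libvirt.so.0(LIBVIRT_0.1.1)(64bit)",
    "libvirt.so.0(LIBVIRT_0.1.4)(64bit)",
    "libvirt.so.0(LIBVIRT_0.1.5)(64bit)",
]

virt_viewer_requires = [
    "libvirt.so.0()(64bit)",
    "libvirt.so.0(LIBVIRT_0.0.3)(64bit)",
    "libvirt.so.0(LIBVIRT_0.0.5)(64bit)",
    "libvirt.so.0(LIBVIRT_0.1.0)(64bit)",
    "libvirt.so.0(LIBVIRT_0.4.0)(64bit)",
    "libvirt.so.0(LIBVIRT_0.5.0)(64bit)",
]

for item in missing_requirements(virt_viewer_requires, libvirt_provides):
    print("unsatisfied:", item)
```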

Thus, there is now rarely any need for an application developer to manually list dependencies against the libvirt library. ie they can remove any line like

Requires: libvirt >= 0.8.3

RPM will determine the minimum version automatically. This is good, because application developers will often forget to update these manually stated deps. The only possible exception to this is where the application needs to avoid an implementation bug in a particular version of libvirt and so requests a version that is newer than that indicated by the symbol versions. In practice this isn’t very useful for libvirt, since most libvirt drivers have a client server model and the RPM dependency only validates the client, not the server.

The perils of backporting APIs with versioned symbols

It is fairly common when maintaining software in Linux distributions to want to backport code from a newer release, rather than rebase the entire package. Most maintainers will only attempt this for bug fixes or security fixes, but sometimes there is demand to backport new features, and even new APIs. From the descriptions shown above it should be clear that there are a number of serious downsides associated with backporting new APIs.

Impact on compile time pkgconfig checks

Application developers look at the version where a symbol was introduced and encode that in a pkgconfig check at compile time. If an OS distribution backports a symbol to an earlier version, the application developer has to now create OS-distro specific checks for the presence of the backported API. This is a retrograde step for the application developer, because the primary reason for using pkgconfig is that it is OS-distro *independent*

Next consider what happens to the libvirt.so symbols when an API is backported. There are three possible approaches: keep the original symbol version, adopt the symbol version of the release being backported to, or invent a new version. All approaches ultimately fail.

Impact of maintaining the new version with a backport

Consider backporting an API from 0.5.0, to a 0.4.0 release. If the original symbol version is maintained the runtime checks by the ELF library loader will still succeed normally. There is an impact on the install time package manager checks though. Backporting a single API from 0.5.0 release will result in the libvirt-client library gaining

Provides: libvirt.so.0(LIBVIRT_0.5.0)(64bit)

Even if there are many other APIs in 0.5.0 that are not backported. An application which uses an API from the 0.5.0 release will gain

Requires: libvirt.so.0(LIBVIRT_0.5.0)(64bit)

This application can now be installed against either 0.4.0 + backported API, or 0.5.0. RPM is unable to correctly check requirements at application install time, because it cannot distinguish the two scenarios. The API backport has broken the RPM versioning.
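The gap between the two checks can be modelled in a few lines of Python. This is a simplified model, not RPM or the ELF loader: RPM is treated as comparing version tags, the loader as comparing individual versioned symbols. The helper names are invented and `virHypotheticalOther` is a made-up stand-in for any 0.5.0 API that was not backported.

```python
def rpm_satisfied(requires, provides):
    """Install-time check: every required tag must be provided."""
    return set(requires) <= set(provides)


def loader_satisfied(needed_symbols, exported_symbols):
    """Run-time check: every needed versioned symbol must be exported."""
    return set(needed_symbols) <= set(exported_symbols)


# A 0.4.0 library with only virEventRegisterImpl backported from 0.5.0
backport_provides = {"libvirt.so.0(LIBVIRT_0.4.0)(64bit)",
                     "libvirt.so.0(LIBVIRT_0.5.0)(64bit)"}
backport_exports = {"virConnectOpenAuth@LIBVIRT_0.4.0",
                    "virEventRegisterImpl@LIBVIRT_0.5.0"}

# An application built against a real 0.5.0 that uses two 0.5.0 APIs
app_requires = {"libvirt.so.0(LIBVIRT_0.5.0)(64bit)"}
app_symbols = {"virEventRegisterImpl@LIBVIRT_0.5.0",
               "virHypotheticalOther@LIBVIRT_0.5.0"}  # hypothetical name

print("RPM allows install:", rpm_satisfied(app_requires, backport_provides))
print("loader can resolve:", loader_satisfied(app_symbols, backport_exports))
```

The package installs cleanly against the backported library, yet one of its symbols cannot be resolved when it is run.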

Impact of adopting the older version with a backport

The other option for backporting is thus to adopt the version of the release being backported to. ie change the backported API’s symbol version string from

virEventRegisterImpl@LIBVIRT_0.5.0

To

virEventRegisterImpl@LIBVIRT_0.4.0

The problem here is that an application which was built against a normal libvirt binary will have a requirement for

virEventRegisterImpl@LIBVIRT_0.5.0

Attempting to run this application against the libvirt.so with the backported API will fail, because the linker will see that “LIBVIRT_0.4.0” is not the same as “LIBVIRT_0.5.0”.

All libvirt applications now have to be specially compiled for this OS distro’s libvirt to pick up correct symbol versions. This doesn’t solve the core problem, it merely reverses it. The application binary is now incompatible with a normal libvirt, making it impossible to test it against upstream libvirt releases without rebuilding the application too.

In addition the RPM version checking has once again been broken. An application that needs the backported symbol will have

Requires: libvirt.so.0(LIBVIRT_0.4.0)(64bit)

There is no way for RPM to validate whether this means the plain ‘0.4.0’ release, or the 0.4.0 + backport release.

Changing the symbol version when doing the backport has in effect changed the ABI of the library in this particular OS distro.

What is worse is that this changed symbol version has to be preserved in every single future release of the OS distro, i.e. applications from RHEL-5 expect to be able to run against any libvirt from RHEL-6, RHEL-7, etc without recompilation.

So changing the symbol version has all the downsides of not changing the symbol version, with an additional permanent maintenance burden of patching versions for every future OS release.

Impact of inventing a new version with a backport

For libraries which only target the Linux ELF loader and no other OS platform there is one other possible trick. The ELF linker script does not require a single linear progression of versioned symbol groups. The tree can have arbitrary branches, provided it remains a DAG. A symbol can also appear in multiple places at once.

This could hypothetically be leveraged during a backport of an API, by introducing a branch in the version tree at which the symbol appears. So instead of the symbol being either

virEventRegisterImpl@LIBVIRT_0.5.0

virEventRegisterImpl@LIBVIRT_0.4.0

It could be (eg based off the RPM release where it was backported).

virEventRegisterImpl@LIBVIRT_0.4.0_1

The RPM will thus gain

Provides: libvirt.so.0(LIBVIRT_0.4.0_1)(64bit)

And applications built against this backport will gain

Requires: libvirt.so.0(LIBVIRT_0.4.0_1)(64bit)

As compared to the first option of maintaining the original symbol version LIBVIRT_0.5.0, the RPM version checking will continue to operate correctly.

This approach, however, still has all the permanent package maintenance problems of the second approach, i.e. the patch which adds a ‘LIBVIRT_0.4.0_1’ version has to be maintained for every single future release of the OS distro, even once the backport of the actual code has gone. It also means that applications built against the backported library won’t work with an upstream build and vice versa.
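The "won't work with an upstream build and vice versa" point can be sketched by treating RPM dependency resolution as a plain set comparison between what an application Requires and what a library package Provides. The Provides sets here are assumptions made up for illustration (a real package lists many more version nodes); only the LIBVIRT_0.4.0_1 strings come from the example above.

```python
# Rough model of RPM dependency checking: a package is installable only if
# every Requires string appears among the library package's Provides.
# The Provides sets are illustrative assumptions, not real package metadata.

def installable(requires, provides):
    """True if all required capabilities are provided."""
    return set(requires) <= set(provides)

upstream_050 = {
    "libvirt.so.0(LIBVIRT_0.4.0)(64bit)",
    "libvirt.so.0(LIBVIRT_0.5.0)(64bit)",
}
distro_040_backport = {
    "libvirt.so.0(LIBVIRT_0.4.0)(64bit)",
    "libvirt.so.0(LIBVIRT_0.4.0_1)(64bit)",  # branch added for the backport
}

app_built_on_distro = {"libvirt.so.0(LIBVIRT_0.4.0_1)(64bit)"}
app_built_on_upstream = {"libvirt.so.0(LIBVIRT_0.5.0)(64bit)"}

matrix = {
    ("distro app", "distro lib"): installable(app_built_on_distro, distro_040_backport),
    ("distro app", "upstream lib"): installable(app_built_on_distro, upstream_050),
    ("upstream app", "distro lib"): installable(app_built_on_upstream, distro_040_backport),
    ("upstream app", "upstream lib"): installable(app_built_on_upstream, upstream_050),
}
for combo, ok in matrix.items():
    print(combo, "->", "ok" if ok else "unsatisfied dependency")
```

RPM is now telling the truth in every combination, which is the improvement over the first option, but the two mixed combinations still fail.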

Conclusion for backporting symbols

It has been demonstrated that whatever approach is taken, backporting an API in a library with versioned symbols will break RPM version checking, cause incompatibilities in ELF loader version checking, or both. It also requires applications to write OS distro specific checks. For a library that intends to maintain permanent API/ABI stability for application developers this is unacceptable.

The conclusion is clear: never backport APIs from *any* library, especially not one that makes any use of symbol versioning.

If a new API is required, then the entire package should be rebased to the version which includes the desired API.

The previous post described how to set up an iSCSI target on Fedora/RHEL the hard way. This post demonstrates how to configure iSCSI on a libvirt KVM host using virsh and then provision a guest using virt-install.

Defining the storage pool

libvirt manages all storage through an object known as a “storage pool”. There are many types of storage pools: SCSI, NFS, ext4, plain directory and, of interest for this article, iSCSI. All libvirt objects are configured via an XML description and storage pools are no exception. For an iSCSI storage pool there are three pieces of information to provide. The “Target path” determines how libvirt will expose device paths for the pool. Paths like /dev/sda, /dev/sdb, etc. are not a good choice because they are not stable across reboots or across machines in a cluster; the names are assigned on a first come, first served basis by the kernel. It is strongly recommended that “/dev/disk/by-path” be used unless you know what you’re doing. This results in a naming scheme that will be stable across all machines. The “Host Name” is simply the fully qualified DNS name of the iSCSI server. Finally the “Source Path” is that adorable IQN seen earlier when creating the iSCSI target (“iqn.2004-04.fedora:fedora13:iscsi.kvmguests”). This isn’t the place to describe the full XML schema for storage pools; it suffices to say that an iSCSI config looks like this

<pool type='iscsi'>

<name>kvmguests</name>

<source>

<host name='myiscsi.server.com'/>

<device path='iqn.2004-04.fedora:fedora13:iscsi.kvmguests'/>

</source>

<target>

<path>/dev/disk/by-path</path>

</target>

</pool>

Save this XML snippet to a file named ‘kvmguests.xml’ and then load it into libvirt using the “pool-define” command.

# virsh pool-define kvmguests.xml

Pool kvmguests defined from kvmguests.xml

# virsh pool-list --all

Name State Autostart

-----------------------------------------

default active yes

kvmguests inactive no
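If there are many pools to define, the XML does not have to be typed by hand. The following is a minimal sketch that builds the same iSCSI pool document with Python's standard library; the helper name iscsi_pool_xml is made up for this example, and the element layout simply mirrors the snippet shown above.

```python
import xml.etree.ElementTree as ET

def iscsi_pool_xml(name, host, iqn, target_path="/dev/disk/by-path"):
    """Build an iSCSI storage pool description mirroring the article's XML."""
    pool = ET.Element("pool", type="iscsi")
    ET.SubElement(pool, "name").text = name
    source = ET.SubElement(pool, "source")
    ET.SubElement(source, "host", name=host)      # DNS name of the iSCSI server
    ET.SubElement(source, "device", path=iqn)     # the target IQN
    target = ET.SubElement(pool, "target")
    ET.SubElement(target, "path").text = target_path
    return ET.tostring(pool, encoding="unicode")

xml_text = iscsi_pool_xml(
    "kvmguests",
    "myiscsi.server.com",
    "iqn.2004-04.fedora:fedora13:iscsi.kvmguests",
)
print(xml_text)
```

The resulting string can be written to a file and passed to “virsh pool-define” exactly as the hand-written version was.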

Starting the storage pool

This has saved the configuration, but has not actually logged into the iSCSI target, so no LUNs are yet visible on the virtualization host. Doing so requires running the “pool-start” command, after which the LUNs should be visible using the “vol-list” command:

# virsh pool-start kvmguests

Pool kvmguests started

# virsh vol-list kvmguests

Name Path

-----------------------------------------

10.0.0.1 /dev/disk/by-path/ip-192.168.122.2:3260-iscsi-iqn.2004-04.fedora:fedora13:iscsi.kvmguests-lun-1

10.0.0.2 /dev/disk/by-path/ip-192.168.122.2:3260-iscsi-iqn.2004-04.fedora:fedora13:iscsi.kvmguests-lun-2

The volume names shown there are what will be required later in order to install a guest with virt-install.
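When scripting this step it can be handy to turn the “vol-list” table into a lookup from volume name to device path. This is a small sketch that parses text in the layout shown above; it assumes the two-column format with a dashed separator line and does not invoke virsh itself.

```python
SAMPLE_OUTPUT = """\
Name Path
-----------------------------------------
10.0.0.1 /dev/disk/by-path/ip-192.168.122.2:3260-iscsi-iqn.2004-04.fedora:fedora13:iscsi.kvmguests-lun-1
10.0.0.2 /dev/disk/by-path/ip-192.168.122.2:3260-iscsi-iqn.2004-04.fedora:fedora13:iscsi.kvmguests-lun-2
"""

def parse_vol_list(output):
    """Map volume name -> path from 'virsh vol-list' style text."""
    volumes = {}
    seen_separator = False
    for line in output.splitlines():
        line = line.strip()
        if not line:
            continue                      # tolerate blank lines
        if not seen_separator:
            # Everything up to and including the dashed line is header
            if set(line) == {"-"}:
                seen_separator = True
            continue
        name, path = line.split(None, 1)  # split on the first run of whitespace
        volumes[name] = path
    return volumes

volumes = parse_vol_list(SAMPLE_OUTPUT)
for name, path in volumes.items():
    print(name, "=>", path)
```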

Querying LUN information

Further information about each LUN can be obtained using the “vol-info” and “vol-dumpxml” commands

# virsh vol-info /dev/disk/by-path/ip-192.168.122.2:3260-iscsi-iqn.2004-04.fedora:fedora13:iscsi.kvmguests-lun-1

Name: 10.0.0.1

Type: block

Capacity: 10.00 GB

Allocation: 10.00 GB

# virsh vol-dumpxml /dev/disk/by-path/ip-192.168.122.2:3260-iscsi-iqn.2004-04.fedora:fedora13:iscsi.kvmguests-lun-1

<volume>

<name>10.0.0.1</name>

<key>/dev/disk/by-path/ip-192.168.122.2:3260-iscsi-iqn.2004-04.fedora:fedora13:iscsi.kvmguests-lun-1</key>

<source>

</source>

<capacity>10737418240</capacity>

<allocation>10737418240</allocation>

<target>

<path>/dev/disk/by-path/ip-192.168.122.2:3260-iscsi-iqn.2004-04.fedora:fedora13:iscsi.kvmguests-lun-1</path>

<format type='unknown'/>

<permissions>

<mode>0660</mode>

<owner>0</owner>

<group>6</group>

<label>system_u:object_r:fixed_disk_device_t:s0</label>

</permissions>

</target>

</volume>
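The XML form is the easier one to consume from a program. As a sketch, the following pulls the capacity and allocation out of a trimmed copy of the document above and converts bytes to the figure “vol-info” prints; the helper name and the trimmed XML are just for illustration.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the vol-dumpxml output shown above
VOLUME_XML = """<volume>
  <name>10.0.0.1</name>
  <capacity>10737418240</capacity>
  <allocation>10737418240</allocation>
  <target>
    <format type='unknown'/>
  </target>
</volume>"""

def volume_summary(xml_text):
    """Extract name, capacity in units of 2**30 bytes, and allocation state."""
    vol = ET.fromstring(xml_text)
    capacity = int(vol.findtext("capacity"))
    allocation = int(vol.findtext("allocation"))
    return {
        "name": vol.findtext("name"),
        "capacity_gib": capacity / 1024**3,
        "fully_allocated": allocation >= capacity,
    }

summary = volume_summary(VOLUME_XML)
print("%(name)s: %(capacity_gib).2f GB, fully allocated: %(fully_allocated)s" % summary)
```

Note that 10737418240 bytes is exactly 10 x 2^30, which is why the 10.00 GB shown by “vol-info” comes out as a round number.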

Activating the storage at boot time

Finally, if everything is looking in order, then the pool can be set to start automatically upon host boot.

# virsh pool-autostart kvmguests

Pool kvmguests marked as autostarted

Provisioning a guest on iSCSI

The virt-install command is a convenient way to install new guests from the command line. It supports configuring a guest to use volumes from a storage pool via its --disk argument, which takes the name of the storage pool followed by the name of the volume within it. It is now time to install a guest with two disks, the first for exclusive use as its root filesystem, the second shareable between several guests for data:

# virt-install --accelerate --name rhel6x86_64 --ram 800 --vnc --hvm --disk vol=kvmguests/10.0.0.1 --disk vol=kvmguests/10.0.0.2,perms=sh --pxe
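For anyone provisioning more than a handful of guests, the command line above is easy to assemble programmatically. This sketch only builds the argument list, using exactly the options from the command above; the function name and its parameters are hypothetical, and nothing is executed.

```python
import shlex

def virt_install_args(name, ram_mb, pool, root_vol, shared_vols=()):
    """Assemble a virt-install command using pool/volume disk references."""
    args = [
        "virt-install", "--accelerate",
        "--name", name,
        "--ram", str(ram_mb),
        "--vnc", "--hvm",
        "--disk", f"vol={pool}/{root_vol}",           # exclusive root disk
    ]
    for vol in shared_vols:
        args += ["--disk", f"vol={pool}/{vol},perms=sh"]  # shareable data disk
    args.append("--pxe")
    return args

args = virt_install_args("rhel6x86_64", 800, "kvmguests", "10.0.0.1", ["10.0.0.2"])
command = shlex.join(args)
print(command)
```

The list form can be handed to subprocess.run() as-is, which avoids any shell quoting problems with unusual volume names.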

Once this is up and running, take a look at the guest XML that virt-install used to associate the guest with the iSCSI LUNs:

# virsh dumpxml rhel6x86_64

<domain type='kvm' id='4'>

<name>rhel6x86_64</name>

<uuid>ad8961e9-156f-746f-5a4e-f220bfafd08d</uuid>

<memory>819200</memory>

<currentMemory>819200</currentMemory>

<vcpu>1</vcpu>

<os>

<type arch='x86_64' machine='rhel6.0.0'>hvm</type>

<boot dev='network'/>

</os>

<features>

<acpi/>

<apic/>

<pae/>

</features>

<clock offset='utc'/>

<on_poweroff>destroy</on_poweroff>

<on_reboot>destroy</on_reboot>

<on_crash>destroy</on_crash>

<devices>

<emulator>/usr/libexec/qemu-kvm</emulator>

<disk type='block' device='disk'>

<driver name='qemu' type='raw'/>

<source dev='/dev/disk/by-path/ip-192.168.122.170:3260-iscsi-iqn.2004-04.fedora:fedora13:iscsi.kvmguests-lun-1'/>

<target dev='hda' bus='ide'/>

<alias name='ide0-0-0'/>

<address type='drive' controller='0' bus='0' unit='0'/>

</disk>

<disk type='block' device='disk'>

<driver name='qemu' type='raw'/>

<source dev='/dev/disk/by-path/ip-192.168.122.170:3260-iscsi-iqn.2004-04.fedora:fedora13:iscsi.kvmguests-lun-2'/>

<target dev='hdb' bus='ide'/>

<shareable/>

<alias name='ide0-0-1'/>

<address type='drive' controller='0' bus='0' unit='1'/>

</disk>

<controller type='ide' index='0'>

<alias name='ide0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>

</controller>

<interface type='network'>

<mac address='52:54:00:0a:ca:84'/>

<source network='default'/>

<target dev='vnet1'/>

<alias name='net0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>

</interface>

<serial type='pty'>

<source path='/dev/pts/28'/>

<target port='0'/>

<alias name='serial0'/>

</serial>

<console type='pty' tty='/dev/pts/28'>

<source path='/dev/pts/28'/>

<target port='0'/>

<alias name='serial0'/>

</console>

<input type='mouse' bus='ps2'/>

<graphics type='vnc' port='5901' autoport='yes' keymap='en-us'/>

<video>

<model type='cirrus' vram='9216' heads='1'/>

<alias name='video0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>

</video>

</devices>

</domain>

In particular, notice how the guest uses the huge paths under /dev/disk/by-path to refer to the LUNs, and also that the second disk has the <shareable/> flag set. This ensures the SELinux labelling allows multiple guests to access the disk and that all I/O caching is disabled on the host. Both are critically important if you want the disk to be safely shared between guests.
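Since forgetting the shareable flag is an easy mistake with nasty consequences, it is worth checking for it mechanically. A minimal sketch, run against a cut-down version of the guest XML above (only the disk elements are kept, and the helper name is made up):

```python
import xml.etree.ElementTree as ET

# Cut-down guest XML: only the two disks from the dumpxml output above
DOMAIN_XML = """<domain type='kvm'>
  <devices>
    <disk type='block' device='disk'>
      <source dev='/dev/disk/by-path/ip-192.168.122.170:3260-iscsi-iqn.2004-04.fedora:fedora13:iscsi.kvmguests-lun-1'/>
      <target dev='hda' bus='ide'/>
    </disk>
    <disk type='block' device='disk'>
      <source dev='/dev/disk/by-path/ip-192.168.122.170:3260-iscsi-iqn.2004-04.fedora:fedora13:iscsi.kvmguests-lun-2'/>
      <target dev='hdb' bus='ide'/>
      <shareable/>
    </disk>
  </devices>
</domain>"""

def disk_sharing(xml_text):
    """Map each disk's target device name to whether <shareable/> is set."""
    root = ET.fromstring(xml_text)
    result = {}
    for disk in root.findall("./devices/disk"):
        target = disk.find("target").get("dev")
        result[target] = disk.find("shareable") is not None
    return result

sharing = disk_sharing(DOMAIN_XML)
print(sharing)
```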

Summing up

To allow migration of guests between hosts, some form of shared storage is required. People often turn to NFS at first, but this has security shortcomings because it does not allow for SELinux labelling, which means that there is limited sVirt protection between guests, i.e. one guest can access another guest’s disks. By choosing iSCSI as the shared storage platform, full sVirt isolation between guests is maintained on a par with non-shared storage setups. Hopefully this series of iSCSI related blog posts has shown that even provisioning KVM guests on iSCSI the hard way is not actually very hard at all. It also shows that you don’t need an expensive commercial NAS to make use of iSCSI; any server with a bunch of disks running Fedora or RHEL can easily be turned into an iSCSI storage server for your virtual machines, though you will have to be prepared to get your hands dirty!

The previous articles showed how to provision a guest on iSCSI the nice & easy way using a QNAP NAS and virt-manager. This article, and the one that follows, will show how to provision a guest on iSCSI the “hard way”, using the low level command line tools tgtadm, virsh and virt-install. The iSCSI server in this case is going to be provided by a guest running Fedora 13, x86_64.

Enabling the iSCSI target service

The first task is to install and enable the iSCSI target service. On Fedora and RHEL servers, the iSCSI target service is provided by the ‘scsi-target-utils’ RPM package, so install that now and set the service to start on boot

# yum install scsi-target-utils

# chkconfig tgtd on

# service tgtd start

Allocating storage for the LUNs

The Linux SCSI target service does not care whether the LUNs exported are backed by plain files, LVM volumes or raw block devices, though obviously there is some performance overhead from introducing the LVM and/or filesystem layers as compared to block devices. Since the guest providing the iSCSI service in this example has no spare block device or LVM space, raw files will have to be used. In this example, two LUNs will be created: one thin provisioned (aka sparse file) 10 GB LUN and one fully allocated 512 MB LUN

# mkdir -p /var/lib/tgtd/kvmguests

# dd if=/dev/zero of=/var/lib/tgtd/kvmguests/rhel6x86_64.img bs=1M seek=10240 count=0

# dd if=/dev/zero of=/var/lib/tgtd/kvmguests/shareddata.img bs=1M count=512

# restorecon -R /var/lib/tgtd
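The difference between the two dd invocations is that the first seeks past the end without writing anything (count=0), leaving a file whose size is set but whose blocks are not allocated, while the second actually writes zeros. The same two behaviours can be sketched in Python; the sizes are kept small here so the demo is cheap to run, and the helper names are invented for the example.

```python
import os
import tempfile

def create_sparse(path, size_bytes):
    """Set the file length without writing data (thin provisioned)."""
    with open(path, "wb") as f:
        f.truncate(size_bytes)

def create_allocated(path, size_bytes, chunk=1024 * 1024):
    """Write zeros for the whole length (fully allocated)."""
    zeros = bytes(chunk)
    with open(path, "wb") as f:
        remaining = size_bytes
        while remaining > 0:
            n = min(chunk, remaining)
            f.write(zeros[:n])
            remaining -= n

MIB = 1024 * 1024
with tempfile.TemporaryDirectory() as tmp:
    sparse = os.path.join(tmp, "sparse.img")
    full = os.path.join(tmp, "full.img")
    create_sparse(sparse, 16 * MIB)
    create_allocated(full, 4 * MIB)
    sparse_size = os.path.getsize(sparse)
    full_size = os.path.getsize(full)
    # st_blocks counts 512-byte units actually allocated; the value
    # depends on the filesystem, so it is printed rather than asserted.
    print("sparse:", sparse_size, "bytes,", os.stat(sparse).st_blocks, "blocks")
    print("full:  ", full_size, "bytes,", os.stat(full).st_blocks, "blocks")
```

On a filesystem that supports sparse files the first file should report far fewer allocated blocks than its size would suggest.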

Exporting an iSCSI target and LUNs (the manual way)

Historically, you had to invoke a series of tgtadm commands to set up the iSCSI target and LUNs, and then add them to /etc/rc.d/rc.sysinit to make sure they run on every boot. This is true of RHEL5 vintage scsi-target-utils at least. If you have a more recent version, circa Fedora 13 / RHEL-6, there is finally a nice configuration file to handle this setup, so those lucky readers can skip ahead. The first step is to add a target; for this the adorable IQNs make a re-appearance

# tgtadm --lld iscsi --op new --mode target --tid 1 --targetname iqn.2004-04.fedora:fedora13:iscsi.kvmguests

Next step is to associate the storage volumes, just created, with LUNs in the iSCSI target.

# tgtadm --lld iscsi --op new --mode logicalunit --tid 1 --lun 1 --backing-store /var/lib/tgtd/kvmguests/rhel6x86_64.img

# tgtadm --lld iscsi --op new --mode logicalunit --tid 1 --lun 2 --backing-store /var/lib/tgtd/kvmguests/shareddata.img

To confirm that all went to plan, query the iSCSI target setup

# tgtadm --lld iscsi --op show --mode target

Target 1: iqn.2004-04.fedora:fedora13:iscsi.kvmguests

System information:

Driver: iscsi

State: ready

I_T nexus information:

LUN information:

LUN: 0

Type: controller

SCSI ID: IET 00010000

SCSI SN: beaf10

Size: 0 MB

Online: Yes

Removable media: No

Backing store type: rdwr

Backing store path: None

LUN: 1

Type: disk

SCSI ID: IET 00010001

SCSI SN: beaf11

Size: 10737 MB

Online: Yes

Removable media: No

Backing store type: rdwr

Backing store path: /var/lib/tgtd/kvmguests/rhel6x86_64.img

LUN: 2

Type: disk

SCSI ID: IET 00010002

SCSI SN: beaf12

Size: 537 MB

Online: Yes

Removable media: No

Backing store type: rdwr

Backing store path: /var/lib/tgtd/kvmguests/shareddata.img

Account information:

ACL information:
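The sizes in that listing may look odd next to the dd commands, but the arithmetic lines up if the “Size” field is in decimal megabytes rounded to the nearest whole number. That interpretation is my inference from the numbers shown, not something checked against the tgt sources.

```python
MIB = 1024 * 1024

def reported_mb(size_bytes):
    """Bytes -> decimal megabytes, rounded (assumed tgtadm display unit)."""
    return round(size_bytes / 1_000_000)

lun1_bytes = 10240 * MIB   # dd bs=1M seek=10240 count=0
lun2_bytes = 512 * MIB     # dd bs=1M count=512

lun1_mb = reported_mb(lun1_bytes)
lun2_mb = reported_mb(lun2_bytes)
print(lun1_bytes, "bytes ->", lun1_mb, "MB")
print(lun2_bytes, "bytes ->", lun2_mb, "MB")
```

Both results match the “Size:” lines for LUN 1 and LUN 2 in the output above.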

Finally, allow client access to the target. This example allows access to all clients without any authentication.

# tgtadm --lld iscsi --op bind --mode target --tid 1 --initiator-address ALL
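Taken together, the manual setup is one command to create the target, one per LUN, and one to bind initiators. As a sketch, the whole sequence can be generated from a list of backing files; the helper below is hypothetical and uses only the tgtadm options that appear in the commands above, numbering LUNs from 1 in list order.

```python
def tgtadm_commands(tid, iqn, backing_stores, initiator="ALL"):
    """Return the tgtadm argument lists for one target and its LUNs."""
    base = ["tgtadm", "--lld", "iscsi"]
    cmds = [base + ["--op", "new", "--mode", "target",
                    "--tid", str(tid), "--targetname", iqn]]
    # LUN 0 is the controller, so data LUNs start at 1
    for lun, path in enumerate(backing_stores, start=1):
        cmds.append(base + ["--op", "new", "--mode", "logicalunit",
                            "--tid", str(tid), "--lun", str(lun),
                            "--backing-store", path])
    cmds.append(base + ["--op", "bind", "--mode", "target",
                        "--tid", str(tid), "--initiator-address", initiator])
    return cmds

cmds = tgtadm_commands(
    1,
    "iqn.2004-04.fedora:fedora13:iscsi.kvmguests",
    ["/var/lib/tgtd/kvmguests/rhel6x86_64.img",
     "/var/lib/tgtd/kvmguests/shareddata.img"],
)
for cmd in cmds:
    print("# " + " ".join(cmd))
```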

Exporting an iSCSI target and LUNs (with a config file)

As mentioned earlier, modern versions of scsi-target-utils now include a configuration file for setting up targets and LUNs. The master configuration is /etc/tgt/targets.conf and is full of example configurations. To replicate the manual setup from above requires adding a configuration block that looks like this

<target iqn.2004-04.fedora:fedora13:iscsi.kvmguests>

backing-store /var/lib/tgtd/kvmguests/rhel6x86_64.img

backing-store /var/lib/tgtd/kvmguests/shareddata.img

</target>

With the configuration update, load it into the iSCSI target daemon

# tgt-admin --execute
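The configuration block is regular enough that it can be generated rather than hand-edited. A minimal sketch, assuming only the two directives used in the block above (the function name and the indentation are my own choices):

```python
def target_block(iqn, backing_stores):
    """Render a <target> block for /etc/tgt/targets.conf."""
    lines = [f"<target {iqn}>"]
    lines += [f"    backing-store {path}" for path in backing_stores]
    lines.append("</target>")
    return "\n".join(lines) + "\n"

block = target_block(
    "iqn.2004-04.fedora:fedora13:iscsi.kvmguests",
    ["/var/lib/tgtd/kvmguests/rhel6x86_64.img",
     "/var/lib/tgtd/kvmguests/shareddata.img"],
)
print(block, end="")
```

The output would be appended to /etc/tgt/targets.conf before loading it into the daemon as described above.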

Two common mistakes

The two most likely places to trip up when configuring the iSCSI target are SELinux and iptables. If adding plain files as LUNs in an iSCSI target, make sure the files are labelled suitably with system_u:object_r:tgtd_var_lib_t:s0. For iptables, ensure that port 3260 is open.
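A quick way to rule out the firewall from the virtualization host is to attempt a plain TCP connection to port 3260. The sketch below does that with the standard socket module; to keep the demo self-contained it tests against a throwaway listener on localhost rather than a real iSCSI server, and the function name is made up.

```python
import socket

def port_open(host, port=3260, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name lookup failures
        return False

# Demo against a temporary local listener instead of a real iSCSI target
with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
    listener.bind(("127.0.0.1", 0))     # let the kernel pick a free port
    listener.listen(1)
    local_port = listener.getsockname()[1]
    reachable = port_open("127.0.0.1", local_port)

print("listener reachable:", reachable)
```

Against a real server the call would simply be port_open() with the iSCSI server's hostname; a False result points at iptables (or the tgtd service not running) rather than at SELinux.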

That is a very quick guide to setting up an iSCSI target on Fedora 13. The next step is to switch back to the virtualization host and provision a new guest using iSCSI for its virtual disk. This is covered in Part II.

Part I of this posting walked through the steps to create an iSCSI target and LUN on a QNAP server. Part II now considers provisioning guests using iSCSI storage from virt-manager.

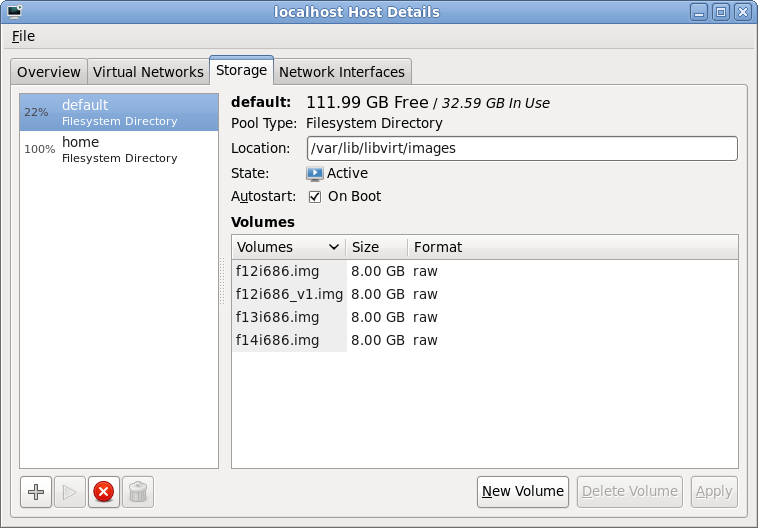

Storage pool management

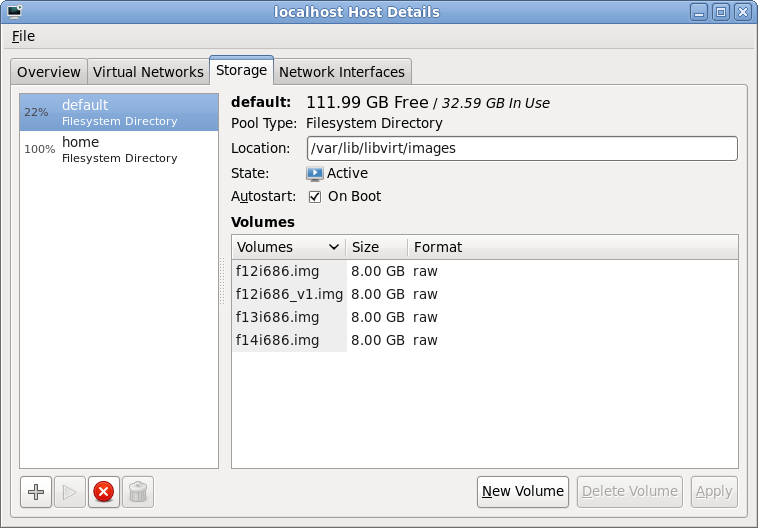

After launching virt-manager and connecting to the desired hypervisor, QEMU/KVM in my case, the first step is to open up the storage management pane. From the main virt-manager window, this can be found by selecting the menu “Edit -> Host Details” and then navigating to the “Storage” tab. The host shown here already has two storage pools configured, both pointing at local filesystem directories. The “default” storage pool will usually be visible on all libvirt hosts managed by virt-manager and lives in /var/lib/libvirt/images. This isn’t much use if you plan to migrate guests between machines, because some form of shared storage is required between the hosts. This is where iSCSI comes into the equation. To add an iSCSI storage pool, click the “+” button in the bottom-left of the window.

Storage pool list

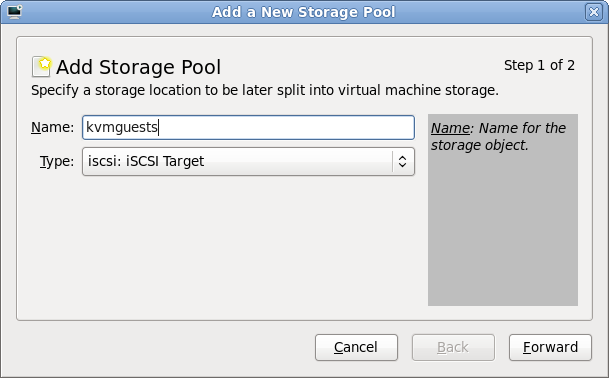

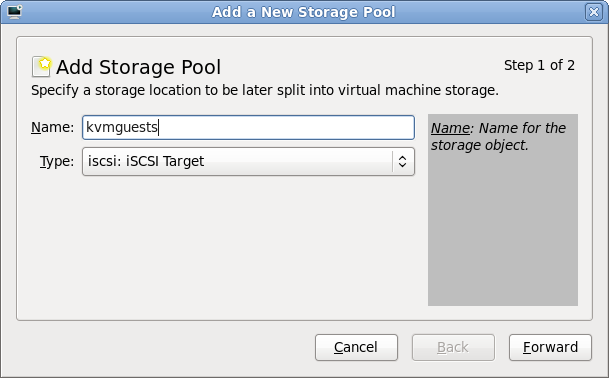

Adding a storage pool

The first stage of setting up a storage pool is to provide a short name and select the type of storage to be accessed. For the sake of consistency this example gives the storage pool the same name as the iSCSI target previously configured on the QNAP.

Storage pool creation

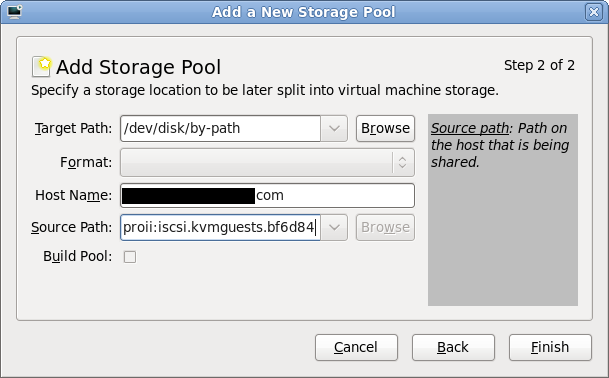

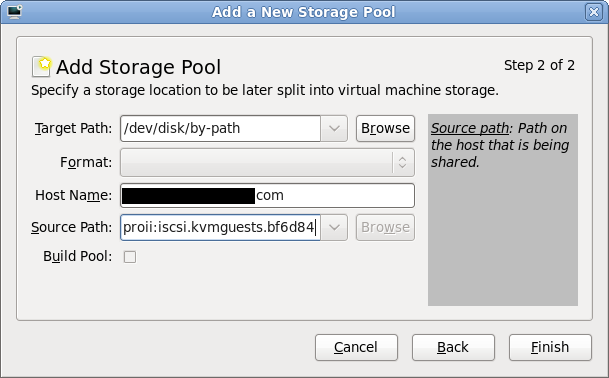

Entering iSCSI parameters

The “Target path” determines how libvirt will expose device paths for the pool. Paths like /dev/sda, /dev/sdb, etc. are not a good choice because they are not stable across reboots or across machines in a cluster; the names are assigned on a first come, first served basis by the kernel. Thus virt-manager helpfully suggests that you use paths under “/dev/disk/by-path”. This results in a naming scheme that will be stable across all machines. The “Host Name” is simply the fully qualified DNS name of the iSCSI server. Finally the “Source Path” is that adorable IQN seen earlier when creating the iSCSI target (“iqn.2004-04.com.qnap:ts-439proii:iscsi.kvmguests.bf6d84”).
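The reason the by-path names are stable is that they are derived from properties of the storage itself (server address, port, target IQN and LUN number) rather than from discovery order. As a sketch, the pattern can be reproduced with a one-line helper; the format is inferred from the paths that “virsh vol-list” printed in the command line article, so treat it as an observation rather than a specification.

```python
def iscsi_by_path(portal_ip, iqn, lun, port=3260, base="/dev/disk/by-path"):
    """Compose the by-path style name observed for iSCSI LUNs."""
    return f"{base}/ip-{portal_ip}:{port}-iscsi-{iqn}-lun-{lun}"

path = iscsi_by_path(
    "192.168.122.2",
    "iqn.2004-04.fedora:fedora13:iscsi.kvmguests",
    1,
)
print(path)
```

Any host that logs into the same target through the same server address ends up with the same name for the same LUN, which is exactly what guest migration needs.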

iSCSI storage pool parameters

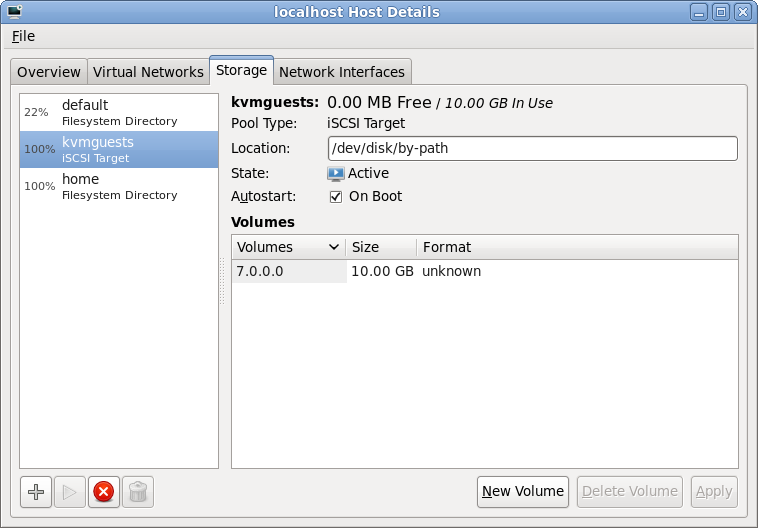

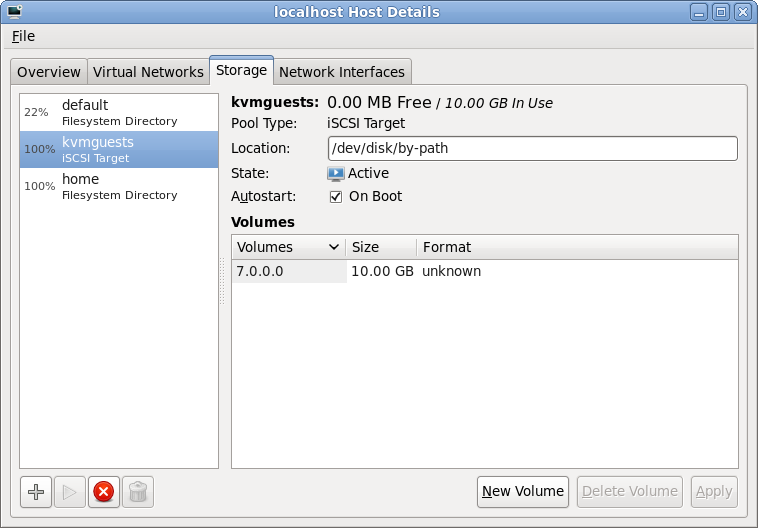

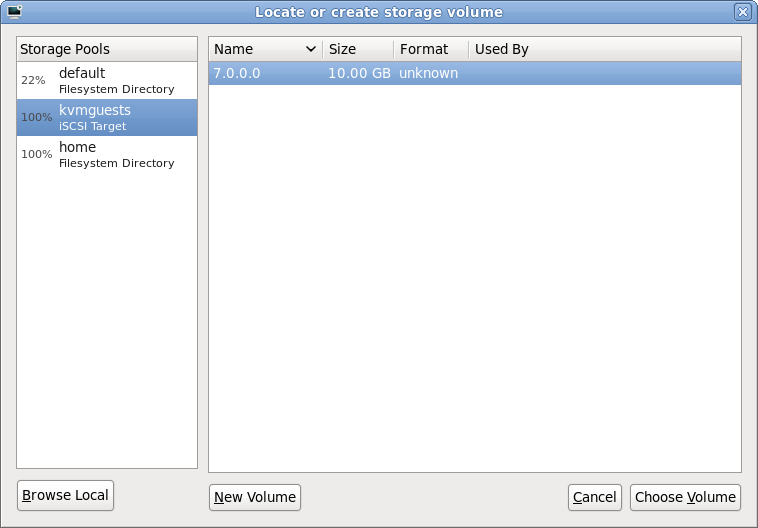

Browsing iSCSI LUNs

If all went to plan, libvirt connected to the iSCSI server and imported the LUNs associated with the iSCSI target specified in the wizard. Selecting the new storage pool, it should be possible to see the LUNs and their sizes. These steps can be repeated on other hosts if the intention is to migrate guests between machines. Obviously care should be taken to not run the same VM on two machines at once though. Ideally use clustering software to protect against this scenario.

iSCSI LUN browsing

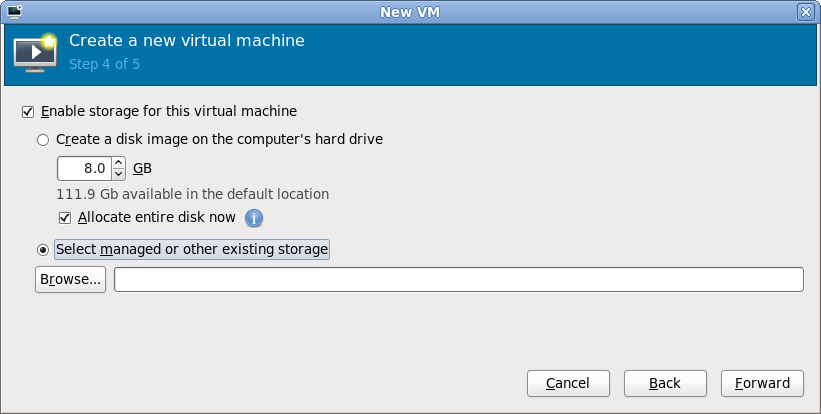

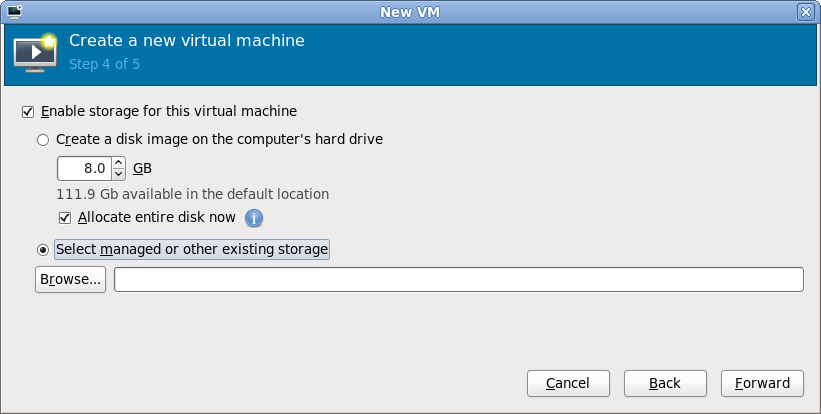

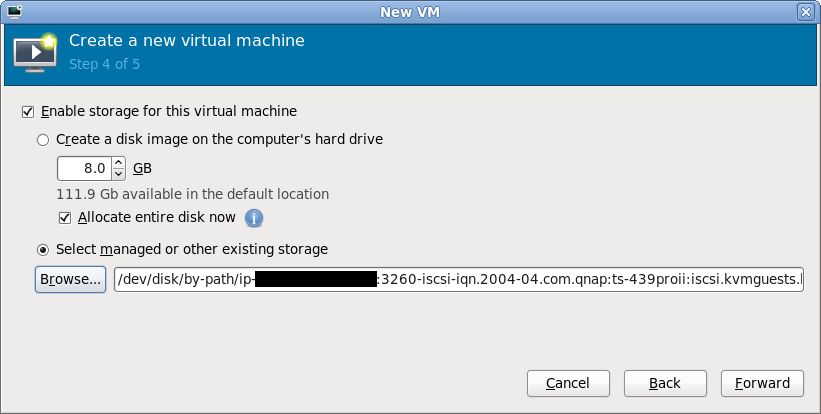

Selecting storage for a new VM

With the iSCSI storage pool configured in virt-manager, it is now possible to create a guest. After breezing through the first couple of steps in the “New VM wizard”, it is time to specify what storage to use for the new guest. By default virt-manager will allocate storage from the local filesystem. This isn’t what we want now, so go for the “Select managed or other existing storage” option and hit the “Browse” button

New guest storage selection

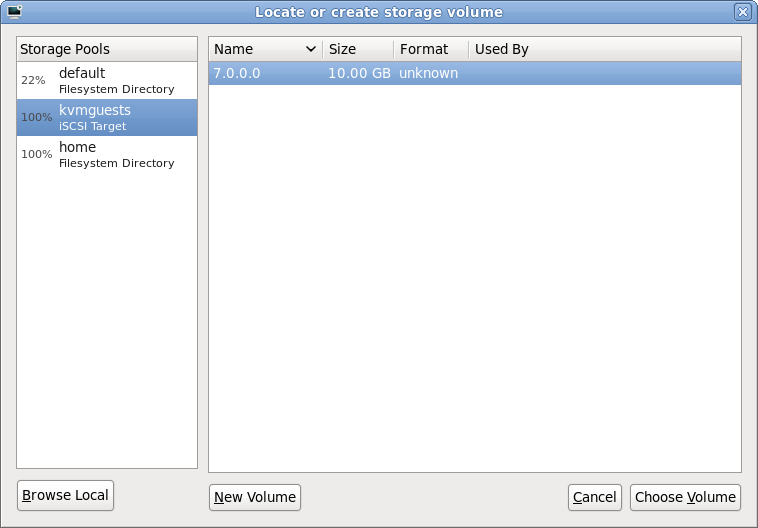

Browsing iSCSI LUNs for the new VM

The dialog that has appeared shows all the storage pools this libvirt connection knows about. This should match the pools seen a short while ago when configuring the iSCSI storage pool. It should be fairly obvious what to do at this point: select the iSCSI storage pool and the desired LUN (volume) within it and press “Choose Volume”.

iSCSI LUN selection

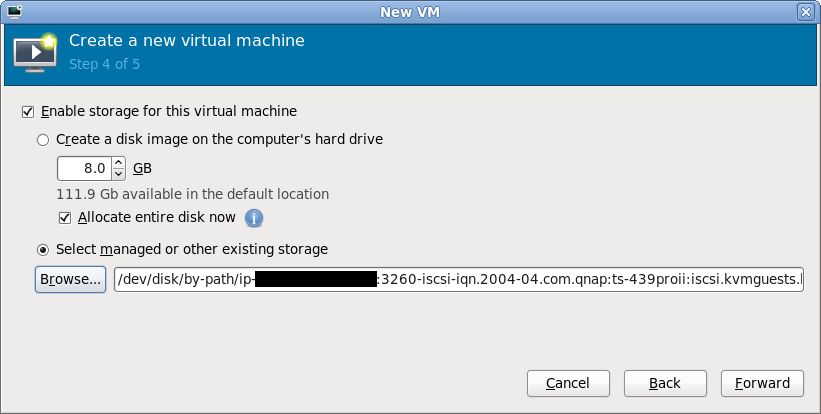

Continuing the new VM wizard

After choosing an iSCSI LUN, its path should now be displayed. It’ll be a rather long and scary looking path under /dev/disk/by-path, don’t stare at it for too long. Just continue with the rest of the new VM wizard in the normal manner and the guest will shortly be up & running using iSCSI storage for its virtual disk.

New guest storage path

Final words

Hopefully this quick walkthrough has shown that provisioning KVM guests on Fedora 12 using iSCSI is as easy as 1..2..3. The hard bit is probably going to be on your iSCSI server if you don’t have a NAS with a nice administrative interface like the QNAP’s. The libvirt storage pool management architecture includes support for actually constructing storage pools & allocating LUNs. For an LVM storage pool libvirt would do this using vgcreate and lvcreate respectively. The iSCSI protocol standard does not support these kinds of operations, but many vendors provide ways to do this with custom APIs; for example, Dell EqualLogic iSCSI arrays have an SSH command shell that can be used for LUN creation/deletion. It would be desirable to add support for these vendor specific APIs for LUN creation/deletion in libvirt. It could even be possible to support iSCSI target creation from libvirt if suitable APIs were available. This would dramatically simplify the steps required to provision new guests on iSCSI by enabling everything to be done from virt-manager, without the need to touch the NAS admin interfaces.